Dimensions of Data Quality (8 Core Dimensions): A Guide to Data Excellence

Poor data quality leads directly to poor decisions, which can cost millions in lost opportunities and inefficient operations.

That is why understanding and implementing the dimensions of data quality matters. It is no longer optional; it is a business imperative.

This article covers:

- How to assess and measure each dimension of data quality

- Practical strategies for implementing data quality improvements

- Case studies

- Common pitfalls and how to avoid them

- Tools and techniques for maintaining data quality over time

What is Data Quality?

Data quality refers to the degree to which data can aid a business in effective operations, insightful decision-making, and planning. It is the measure of how well data reflects reality and supports business objectives.

Think of it as the foundation of your business. Organizations that prioritize data quality tend to be more successful in their digital transformation initiatives and strategic decision-making.

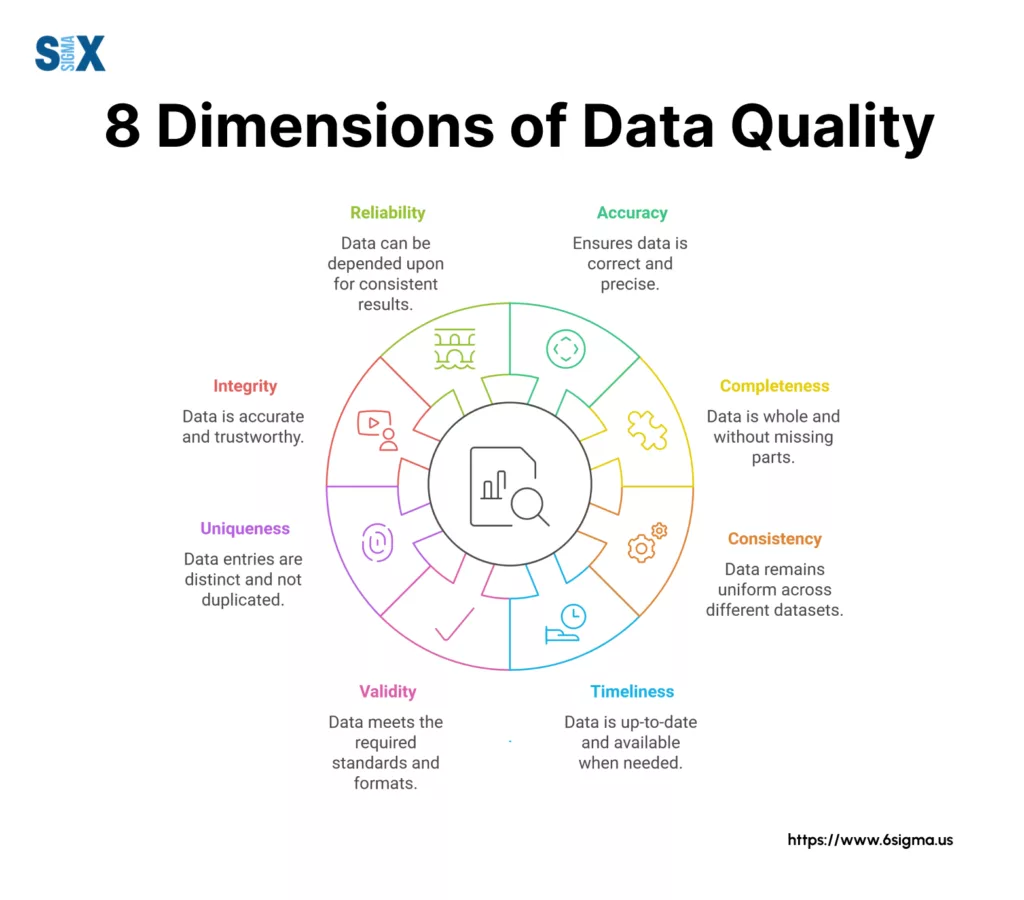

The 8 Core Dimensions of Data Quality

Understanding and managing the dimensions of data quality is crucial for business success.

Accuracy: The Foundation of Data Quality

Data accuracy measures how correctly your data represents real-world entities or events. In Six Sigma terms, we’re looking at the deviation between recorded values and actual values.

Example: A healthcare provider discovered their patient medication records had a 2.3% error rate – potentially dangerous in medical settings. By implementing automated validation systems and double-entry protocols, they reduced this to 0.01%.

Measurement: Use statistical sampling and verification against trusted sources. I typically recommend:

- Random sampling of 5-10% of records

- Cross-verification with original documents

- Automated validation rules

- Regular accuracy audits
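
The sampling and cross-verification steps above can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed method: the record layout, the `trusted` lookup, and the function name `estimate_error_rate` are all assumptions made for the example.

```python
import random

def estimate_error_rate(records, trusted, sample_fraction=0.1, seed=0):
    """Estimate the share of sampled records that disagree with a trusted source.

    records: dict mapping record id -> recorded value (hypothetical layout)
    trusted: dict mapping record id -> verified value from source documents
    """
    if not records:
        raise ValueError("records must not be empty")
    rng = random.Random(seed)  # fixed seed so the audit sample is reproducible
    ids = sorted(records)
    sample_size = max(1, round(len(ids) * sample_fraction))
    sample = rng.sample(ids, sample_size)
    # A record counts as an error when it differs from, or is absent in, the trusted source.
    mismatches = [i for i in sample if records[i] != trusted.get(i)]
    return len(mismatches) / sample_size, mismatches
```

The function returns both the estimated error rate and the ids that failed, so the failing records can be traced back to their original documents.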

Best Practices:

- Implement automated data entry validation

- Establish clear data entry protocols

- Regular staff training on data accuracy

- Periodic data accuracy audits

Completeness: Ensuring No Critical Data is Missing

Data completeness refers to having all required data present and usable. A comprehensive framework helps you measure and improve it.

Example: A telecommunications client was struggling with customer churn analysis because 30% of their customer profile data was incomplete. They implemented mandatory field requirements and automated data collection, improving completeness to 98% and reducing churn by 23%.

Measurement:

- Percentage of populated mandatory fields

- Ratio of complete records to total records

- Impact analysis of missing data
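
The first two measurements above are simple ratios. Here is a minimal sketch, assuming records arrive as Python dictionaries and that `None` or a blank string means "missing" (both are assumptions for this example):

```python
def completeness_metrics(records, mandatory_fields):
    """Return (field_fill_rate, complete_record_ratio) for a list of dicts."""
    def populated(value):
        # Treat None and blank strings as missing; 0 and False count as present.
        return value is not None and str(value).strip() != ""

    total_fields = len(records) * len(mandatory_fields)
    if total_fields == 0:
        return 1.0, 1.0  # nothing required, so nothing is missing
    filled = sum(populated(r.get(f)) for r in records for f in mandatory_fields)
    complete = sum(all(populated(r.get(f)) for f in mandatory_fields) for r in records)
    return filled / total_fields, complete / len(records)
```

The two numbers answer different questions: the fill rate shows how much data is missing overall, while the complete-record ratio shows how many records are usable without any gaps.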

Best Practices:

- Define mandatory vs. optional fields clearly

- Implement real-time completeness checks

- Automate data collection where possible

- Regular completeness audits

Consistency: Maintaining Uniformity Across Systems

Data consistency ensures uniform representation of information across all systems and processes. Consistency is what turns data into a universal language for your organization.

Example: A global retail chain found their customer addresses were formatted differently across their CRM, shipping, and billing systems. By implementing standardized formatting rules, they reduced shipping errors by 42% and saved $2.3 million annually.

Measurement:

- Cross-system data comparison rates

- Format standardization compliance

- Consistency violation tracking

- Regular reconciliation reports
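
A cross-system comparison like the one in the address example might look like the sketch below. The normalization rules and the abbreviation table are hypothetical and deliberately tiny; a real address standard would be far more extensive.

```python
import re

def normalize_address(address):
    """Apply a small, illustrative set of formatting rules."""
    text = re.sub(r"[.,]", "", address.lower())
    text = re.sub(r"\s+", " ", text).strip()
    # Hypothetical abbreviation table, for illustration only.
    abbreviations = {"street": "st", "avenue": "ave", "road": "rd"}
    return " ".join(abbreviations.get(word, word) for word in text.split(" "))

def consistency_rate(system_a, system_b):
    """Share of customer ids present in both systems whose addresses agree."""
    shared = system_a.keys() & system_b.keys()
    if not shared:
        return None  # nothing to compare
    matches = sum(
        normalize_address(system_a[k]) == normalize_address(system_b[k])
        for k in shared
    )
    return matches / len(shared)
```

Normalizing before comparing separates true disagreements (a different house number) from formatting noise ("Street" versus "St").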

Best Practices:

- Implement enterprise-wide data standards

- Use automated format validation tools

- Regular cross-system audits

- Establish clear data governance policies

Timeliness: Ensuring Data Availability When Needed

Timeliness measures how current your data is and whether it’s available when needed for decision-making.

Example: A semiconductor manufacturer was making pricing decisions based on market data that was 48 hours old. By implementing near-real-time data updates, they improved pricing accuracy by 28% and increased margins by 12%.

Measurement:

- Time lag between data creation and availability

- Update frequency metrics

- Real-time availability rates

- System response times
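
The lag between data creation and availability can be measured directly when both timestamps are recorded. This sketch assumes each event is a `(created_at, available_at)` pair; the function name and the idea of a single `max_lag` threshold are assumptions for illustration.

```python
from datetime import datetime, timedelta

def timeliness_report(events, max_lag):
    """Summarize lag for a list of (created_at, available_at) datetime pairs."""
    if not events:
        raise ValueError("events must not be empty")
    lags = [available - created for created, available in events]
    on_time = sum(lag <= max_lag for lag in lags)
    return {
        "average_lag": sum(lags, timedelta()) / len(lags),
        "worst_lag": max(lags),
        "on_time_rate": on_time / len(lags),
    }
```

Reporting the worst lag alongside the average matters because a single very late feed, like the 48-hour-old market data in the example, can hide behind a healthy-looking mean.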

Best Practices:

- Implement automated data refresh cycles

- Monitor data processing delays

- Establish update frequency requirements

- Regular timeliness audits

Validity: Ensuring Data Meets Business Rules

Data validity measures how well data conforms to its required format, type, and range rules.

Example: A major insurance company found 13% of policy numbers didn’t match the required format, causing claim processing delays. Implementing automated validation rules reduced this to 0.3%, saving 2,800 processing hours monthly.

Measurement:

- Format compliance rates

- Business rule validation scores

- Data type consistency checks

- Range rule compliance
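
Format, type, and range rules can be expressed as small predicate functions and scored per field. The rules below are invented for illustration: the `POL-` pattern and the premium range are assumptions, not any real insurer's standard.

```python
import re

# Hypothetical rules for illustration only.
def valid_policy_number(value):
    # Format rule: "POL-" followed by exactly six digits.
    return isinstance(value, str) and re.fullmatch(r"POL-\d{6}", value) is not None

def valid_premium(value):
    # Type rule (numeric, not bool) combined with a range rule.
    is_number = isinstance(value, (int, float)) and not isinstance(value, bool)
    return is_number and 0 < value <= 100_000

RULES = {"policy_number": valid_policy_number, "premium": valid_premium}

def validity_rates(records, rules=RULES):
    """Return the compliance rate per field across a list of dict records."""
    rates = {}
    for field, is_valid in rules.items():
        passed = sum(1 for r in records if is_valid(r.get(field)))
        rates[field] = passed / len(records) if records else None
    return rates
```

Keeping each rule as a named function makes it easy to document the business rule next to the code that enforces it.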

Best Practices:

- Implement automated validation rules

- Regular format compliance audits

- Clear documentation of business rules

- Automated error detection and reporting

Uniqueness: Eliminating Costly Duplicates

Data uniqueness ensures that each real-world entity is represented exactly once in your dataset. Every duplicate record is a potential point of failure in your decision-making process.

Example: A retailer discovered that 12% of their customer records were duplicates, leading to redundant marketing efforts and customer frustration. By implementing robust deduplication protocols and unique identifier systems, they reduced duplicate records to 0.1% and improved customer satisfaction scores by 34%.

Measurement:

- Duplicate detection rates

- Cross-system duplication checks

- Temporal duplication patterns

- Merge success rates
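
A basic duplicate detection rate can be computed by grouping records on a normalized key. This sketch only catches exact matches after trimming and lowercasing; fuzzy matching (misspellings, nicknames) is out of scope, and the choice of key fields is an assumption left to the caller.

```python
from collections import defaultdict

def find_duplicates(records, key_fields):
    """Group records sharing the same normalized key and report the duplicate rate."""
    groups = defaultdict(list)
    for index, record in enumerate(records):
        key = tuple(str(record.get(f, "")).strip().lower() for f in key_fields)
        groups[key].append(index)
    clusters = [idx for idx in groups.values() if len(idx) > 1]
    # Every record beyond the first in a cluster counts as a surplus duplicate.
    surplus = sum(len(idx) - 1 for idx in clusters)
    rate = surplus / len(records) if records else 0.0
    return rate, clusters
```

The returned clusters list the positions of records that would need to be merged, which is the input a merge or survivorship step would work from.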

Best Practices:

- Implement robust validation rules

- Deploy real-time duplicate detection

- Establish clear data entry standards

- Maintain comprehensive audit trails

Integrity: Ensuring Data Relationships Remain Intact

Data integrity ensures that relationships between different data elements remain accurate and maintained throughout the data lifecycle. Think of data integrity as the trust factor in your data relationships.

Example: A business solutions provider discovered that 7% of their customer orders weren’t properly linked to inventory records, causing fulfillment delays. By implementing referential integrity constraints and relationship validation rules, they improved order processing efficiency by 34%.

Measurement:

- Referential integrity violation rates

- Relationship consistency checks

- Foreign key validation scores

- Cross-reference accuracy rates
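
The referential integrity violation rate is the share of child rows whose foreign key points at nothing. Here is a minimal in-memory sketch, assuming rows are dictionaries; in a database the same check is usually an anti-join or a foreign key constraint. The table and column names in the usage note are hypothetical.

```python
def referential_violations(child_rows, parent_rows, foreign_key, primary_key):
    """Return the violation rate and the child rows whose foreign key has no parent."""
    parent_keys = {row[primary_key] for row in parent_rows}
    orphans = [row for row in child_rows if row.get(foreign_key) not in parent_keys]
    rate = len(orphans) / len(child_rows) if child_rows else 0.0
    return rate, orphans
```

For the order example above, a call such as `referential_violations(orders, inventory, "sku", "sku")` would list the orders that cannot be linked to an inventory record.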

Best Practices:

- Implement database constraints

- Regular relationship audits

- Automated integrity checking

- Clear documentation of data relationships

Reliability: Building Trust in Your Data

Data reliability measures how consistently your data produces expected results and maintains its quality over time. It’s what I call the “sleep well at night” factor for data-driven decisions.

Example: At a financial institution, inconsistent reporting data was causing board-level trust issues. By implementing reliability metrics and continuous monitoring, they achieved 99.97% reliability in critical financial reports, restoring stakeholder confidence.

Measurement:

- Consistency over time

- Error rate trends

- Reliability scoring

- Usage confidence metrics
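
One way to track error rate trends is a p-chart, a standard Six Sigma control chart for proportions. The article does not prescribe this technique; it is offered here as one reasonable option. The sketch assumes the same number of records is audited in every period.

```python
import math

def p_chart_signals(error_counts, sample_size):
    """Flag periods whose error proportion exceeds the p-chart upper control limit.

    error_counts: errors found in each period's audit sample
    sample_size: records audited per period (assumed constant here)
    """
    proportions = [count / sample_size for count in error_counts]
    p_bar = sum(error_counts) / (sample_size * len(error_counts))
    sigma = math.sqrt(p_bar * (1 - p_bar) / sample_size)
    upper = p_bar + 3 * sigma
    lower = max(0.0, p_bar - 3 * sigma)  # a proportion cannot go below zero
    flagged = [i for i, p in enumerate(proportions) if p > upper]
    return {"center": p_bar, "upper": upper, "lower": lower, "flagged": flagged}
```

A flagged period signals that the error rate moved more than ordinary sampling variation would explain, which is the point at which an investigation is worth the effort.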

Best Practices:

- Implement continuous monitoring

- Regular reliability assessments

- Clear reliability standards

- Stakeholder feedback loops

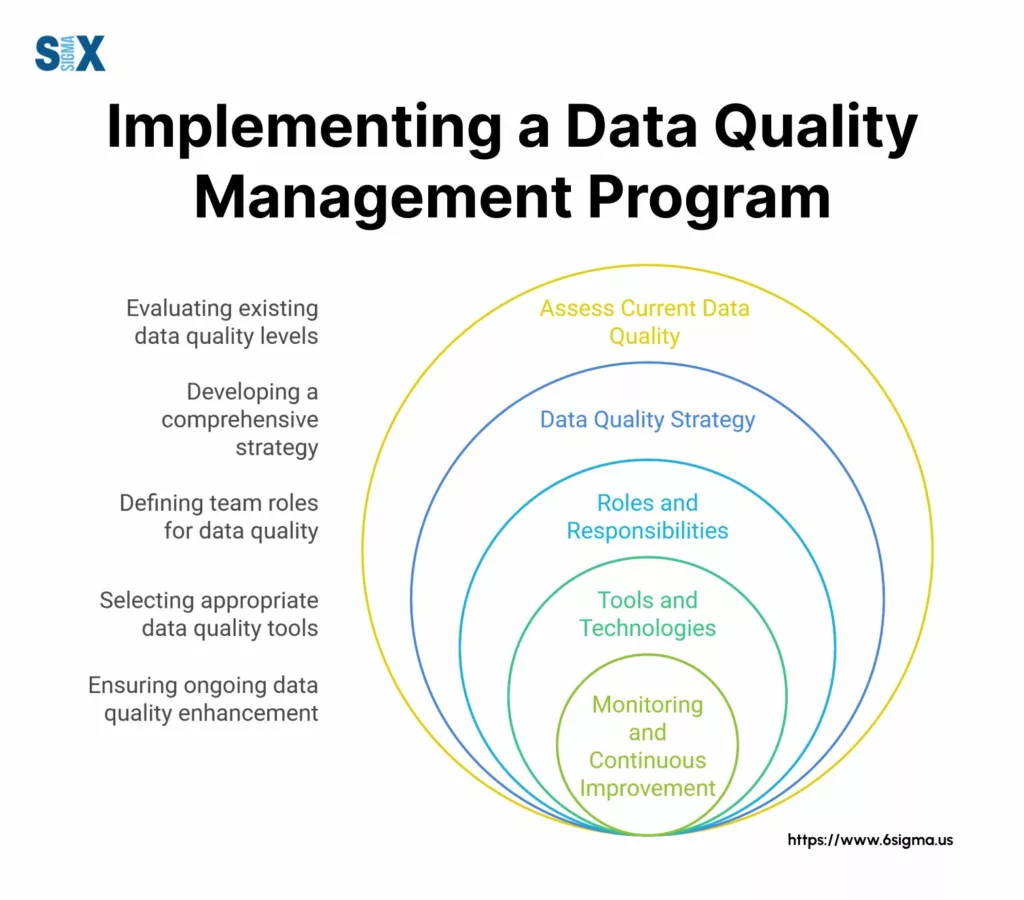

Implementing a Data Quality Management Program

The real challenge lies in implementing an effective data quality management program. Let me share a framework that can help organizations transform their data quality initiatives.

Steps to Assess Current Data Quality

I recommend starting with a comprehensive assessment approach:

1. Baseline Measurement

- Conduct a data quality audit across all eight dimensions

- Use statistical sampling techniques to evaluate current state

- Document pain points and their business impact

- Establish quantitative baseline metrics

2. Gap Analysis

- Compare current state against industry benchmarks

- Identify critical data elements and their quality requirements

- Prioritize improvement opportunities based on business impact

- Document resource requirements and constraints
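
The prioritization step of the gap analysis can be sketched as a weighted ranking. Everything here is an assumption for illustration: the scoring rule (shortfall multiplied by an impact weight), the function name, and any numbers you feed it.

```python
def prioritize_gaps(baseline, targets, impact_weights):
    """Rank dimensions by (target - baseline) multiplied by a business impact weight."""
    gaps = []
    for dimension, target in targets.items():
        # A dimension already at or above target has no shortfall.
        shortfall = max(0.0, target - baseline.get(dimension, 0.0))
        weighted = shortfall * impact_weights.get(dimension, 1.0)
        gaps.append((dimension, shortfall, weighted))
    # Largest weighted shortfall first.
    return sorted(gaps, key=lambda g: g[2], reverse=True)
```

The weights encode business judgment, so a small accuracy gap in a high-impact area can outrank a larger gap elsewhere.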

Creating a Data Quality Strategy

Your data quality strategy should align with broader business objectives.

1. Strategic Planning

- Define clear, measurable objectives

- Establish realistic timelines

- Allocate necessary resources

- Set specific improvement targets

2. Implementation Roadmap

- Phase improvements based on priority

- Define quick wins vs. long-term initiatives

- Establish clear milestones

- Create contingency plans

Roles and Responsibilities

Clear accountability is essential. Here’s the organizational structure I recommend:

1. Executive Sponsor

- Provides strategic direction

- Ensures resource availability

- Removes organizational barriers

- Champions the initiative

2. Data Quality Team

- Data Quality Manager

- Data Stewards

- Technical Specialists

- Business Process Owners

3. Stakeholder Groups

- Business Users

- IT Teams

- Compliance Officers

- External Partners

Tools and Technologies

I’ve identified several essential tools for data quality management:

1. Monitoring Tools

- Real-time quality monitoring

- Automated validation systems

- Performance dashboards

- Alert mechanisms

2. Analysis Tools

- Statistical analysis software

- Data profiling tools

- Root cause analysis systems

- Reporting platforms

Monitoring and Continuous Improvement

The success of any data quality program lies in continuous monitoring and improvement.

1. Regular Assessment

- Weekly quality metrics review

- Monthly trend analysis

- Quarterly performance reviews

- Annual strategic assessment

2. Improvement Cycles

- Use DMAIC methodology

- Implement corrective actions

- Monitor results

- Adjust strategies as needed

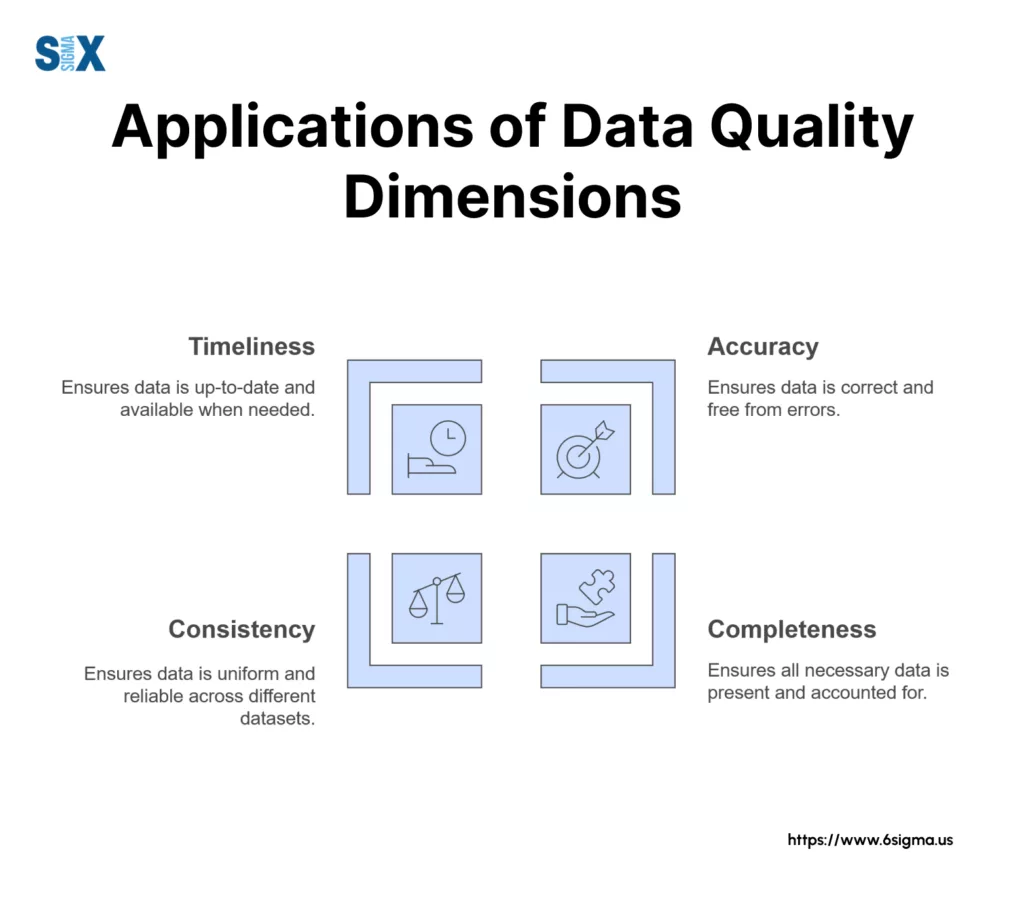

Applications of Data Quality Dimensions

I’ve observed that while the core dimensions of data quality remain constant, their application and importance vary significantly by sector.

Healthcare: Ensuring Patient Data Accuracy for Proper Treatment

Data quality directly impacts patient outcomes. In one notable project, we discovered that 2.3% of patient medication records contained critical inaccuracies.

Critical Focus Areas:

- Patient identification accuracy

- Medical history completeness

- Treatment record consistency

- Real-time data availability

Implementation: A 500-bed hospital system implemented a comprehensive data quality framework that resulted in:

- 99.99% patient identification accuracy

- 47% reduction in medication errors

- 82% improvement in treatment record completeness

- $3.2 million annual savings in administrative costs

Best Practices:

- Automated data validation at point of entry

- Regular cross-reference checks

- Real-time data synchronization

- Comprehensive audit trails

Finance: Maintaining Data Integrity for Regulatory Compliance

My experience working with major financial institutions has taught me that data quality isn’t just about operational efficiency—it’s about regulatory compliance and risk management.

Key Considerations:

- Transaction accuracy

- Audit trail completeness

- Real-time data consistency

- Regulatory reporting precision

Implementation: A global investment bank developed a data quality framework that achieved:

- 99.999% transaction accuracy

- 100% regulatory compliance

- 73% reduction in reporting errors

- 4-hour reduction in end-of-day processing time

Critical Controls:

- Automated reconciliation processes

- Multi-level validation checks

- Real-time compliance monitoring

- Comprehensive data lineage tracking

Retail: Improving Customer Data Quality for Personalized Marketing

Organizations can leverage high-quality data to transform their customer experience. During a project with a major retail chain, we found that poor data quality was causing a 23% loss in marketing effectiveness.

Focus Areas:

- Customer profile accuracy

- Purchase history completeness

- Preference data consistency

- Real-time behavior tracking

Results Achieved:

- 34% improvement in marketing ROI

- 28% increase in customer satisfaction

- 42% reduction in marketing waste

- $4.7 million annual revenue increase

Implementation Strategy:

- Customer data validation frameworks

- Multi-channel data integration

- Real-time data quality monitoring

- Automated preference updates

Manufacturing: Ensuring Data Consistency for Supply Chain Optimization

I’ve developed specialized approaches for maintaining data quality in complex supply chain environments.

Critical Elements:

- Inventory accuracy

- Production data consistency

- Supplier data quality

- Real-time tracking precision

Implementation: A global manufacturing company’s data quality initiative delivered:

- 99.7% inventory accuracy

- 42% reduction in stockouts

- 67% improvement in supplier delivery performance

- $8.2 million annual cost savings

Implementation Framework:

- Automated data capture systems

- Real-time validation protocols

- Cross-system consistency checks

- Integrated quality monitoring

Best Practices

1. Industry-Specific Metrics

- Define industry-relevant KPIs

- Establish appropriate benchmarks

- Create sector-specific measurement systems

2. Regulatory Compliance

- Understand industry regulations

- Implement compliance monitoring

- Maintain audit readiness

3. Stakeholder Engagement

- Identify industry-specific stakeholders

- Develop targeted communication plans

- Regular feedback mechanisms

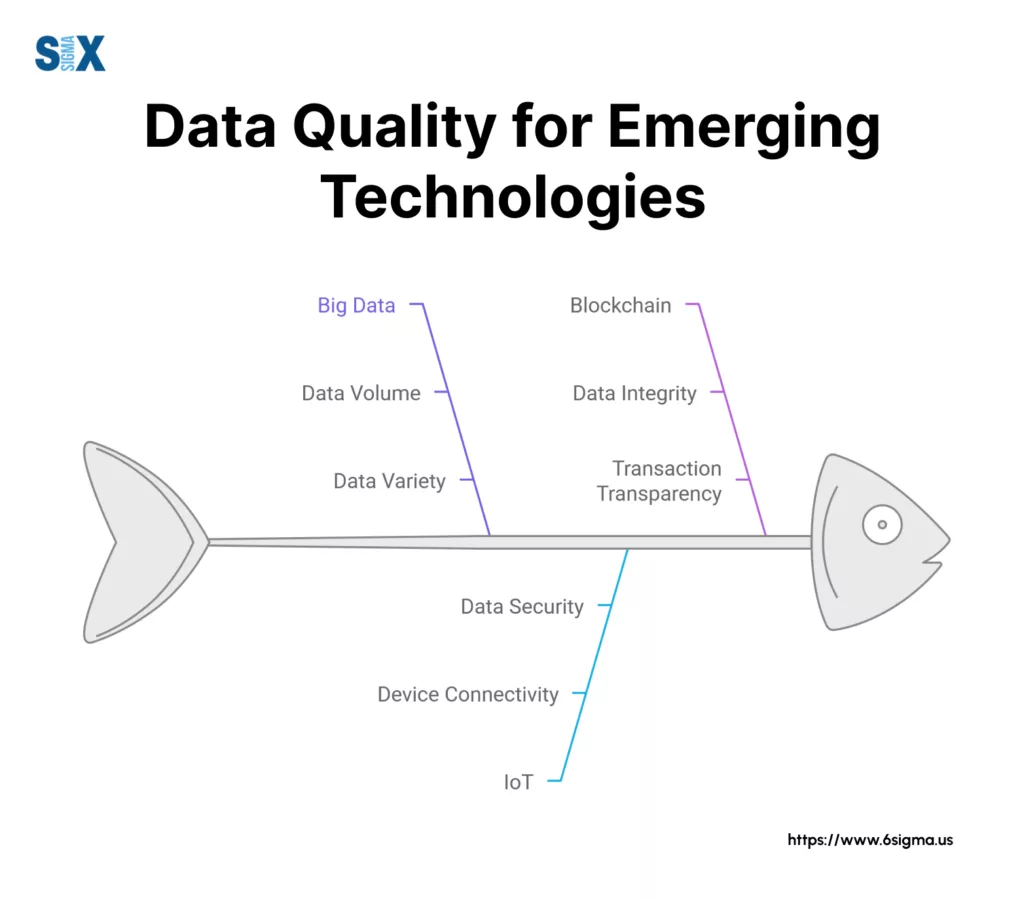

Data Quality for Emerging Technologies

Emerging technologies have fundamentally transformed how we approach the dimensions of data quality.

Big Data: Handling Volume, Velocity, and Variety While Maintaining Quality

A major telecommunications company faced the challenge of managing data quality across 50 petabytes of customer interaction data. Traditional data quality approaches simply weren’t sufficient.

Key Challenges: Volume Management

- Processing 1 million+ transactions per second

- Real-time quality validation

- Storage optimization while maintaining integrity

Implementation Strategy: A scalable approach was developed that combined:

- Distributed validation frameworks

- Automated quality monitoring

- Parallel processing for quality checks

- Statistical sampling techniques

Results:

- 99.99% data accuracy at scale

- 67% reduction in processing time

- 42% improvement in data utilization

- $5.3 million annual cost savings
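
The article does not say which sampling technique was used at this scale. One common choice for streams too large to hold in memory is reservoir sampling (Algorithm R), sketched below as an assumption rather than a description of the project.

```python
import random

def reservoir_sample(stream, k, seed=None):
    """Keep a uniform random sample of k items from a stream of unknown length."""
    rng = random.Random(seed)
    reservoir = []
    for index, item in enumerate(stream):
        if index < k:
            reservoir.append(item)  # fill the reservoir with the first k items
        else:
            # Keep the new item with probability k / (index + 1).
            slot = rng.randint(0, index)
            if slot < k:
                reservoir[slot] = item
    return reservoir
```

Because the sample has a fixed size, the expensive quality checks run on `k` records no matter how many transactions flow through.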

Internet of Things (IoT): Ensuring Accuracy and Timeliness of Sensor Data

IoT implementations often fail due to poor data quality. Here’s a framework to prevent such failures:

Key Focus Areas: Sensor Data Quality

- Real-time validation

- Edge computing quality checks

- Network reliability

- Data synchronization

Implementation Example: A smart manufacturing facility:

- Implemented edge computing quality checks

- Reduced data latency by 89%

- Improved sensor accuracy to 99.99%

- Achieved real-time quality monitoring

Quality Control Measures:

- Automated sensor calibration

- Real-time data validation

- Edge processing protocols

- Redundancy systems
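
Real-time validation of a sensor reading usually comes down to a few cheap checks that can run at the edge. The sketch below assumes a reading is a dictionary with a numeric `value` and a `timestamp` in seconds; the field names and thresholds are hypothetical.

```python
def validate_reading(reading, now, low, high, max_age_seconds):
    """Return a list of problems found in one sensor reading (empty means it passed)."""
    problems = []
    value = reading.get("value")
    if value is None or value != value:  # value != value is True only for NaN
        problems.append("missing value")
    elif not low <= value <= high:
        problems.append("out of range")
    # A reading with no timestamp is treated as infinitely old.
    age = now - reading.get("timestamp", float("-inf"))
    if age > max_age_seconds:
        problems.append("stale")
    elif age < 0:
        problems.append("timestamp in the future")
    return problems
```

Returning a list of named problems, rather than a single pass or fail flag, lets a monitoring system count accuracy and timeliness failures separately.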

Blockchain: Maintaining Data Integrity in Distributed Ledger Systems

For financial institutions implementing blockchain solutions, here’s a specialized approach for ensuring data quality in distributed environments:

Critical Considerations: Consensus Mechanisms

- Data validation protocols

- Smart contract quality

- Network integrity

- Transaction verification

Implementation Example: A supply chain operation:

- Achieved 100% transaction traceability

- Reduced verification time by 92%

- Eliminated data tampering

- Improved supplier compliance by 67%

Implementation Framework: Quality Gates

- Entry point validation

- Smart contract testing

- Network health monitoring

- Regular audits
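
The tamper-evidence property that distributed ledgers rely on can be illustrated with a simple hash chain. This is a toy sketch under stated assumptions: it has no network, consensus, or smart contracts, and the block layout is invented for the example.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block

def block_hash(payload, previous_hash):
    body = json.dumps({"payload": payload, "prev": previous_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def build_chain(payloads):
    chain, previous = [], GENESIS
    for payload in payloads:
        current = block_hash(payload, previous)
        chain.append({"payload": payload, "prev": previous, "hash": current})
        previous = current
    return chain

def first_broken_link(chain):
    """Return the index of the first block that fails verification, or None."""
    previous = GENESIS
    for index, block in enumerate(chain):
        recomputed = block_hash(block["payload"], block["prev"])
        if block["prev"] != previous or block["hash"] != recomputed:
            return index
        previous = block["hash"]
    return None
```

Because each hash covers the previous one, changing any historical record invalidates that block's stored hash, which is what makes a regular audit of the chain cheap to automate.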

Emerging Technology Best Practices

1. Scalable Architecture

- Design for growth

- Automated quality checks

- Distributed processing

- Real-time monitoring

2. Integration Strategy

- Cross-platform validation

- API quality control

- System synchronization

- Error handling protocols

3. Security Measures

- Data encryption

- Access control

- Audit trails

- Compliance monitoring

Future Considerations

As these technologies evolve, I anticipate several key trends in data quality management:

1. Automated Quality Management

- AI-driven quality control

- Self-healing systems

- Predictive quality monitoring

- Autonomous validation

2. Enhanced Integration

- Cross-platform quality standards

- Unified monitoring systems

- Automated synchronization

- Real-time validation

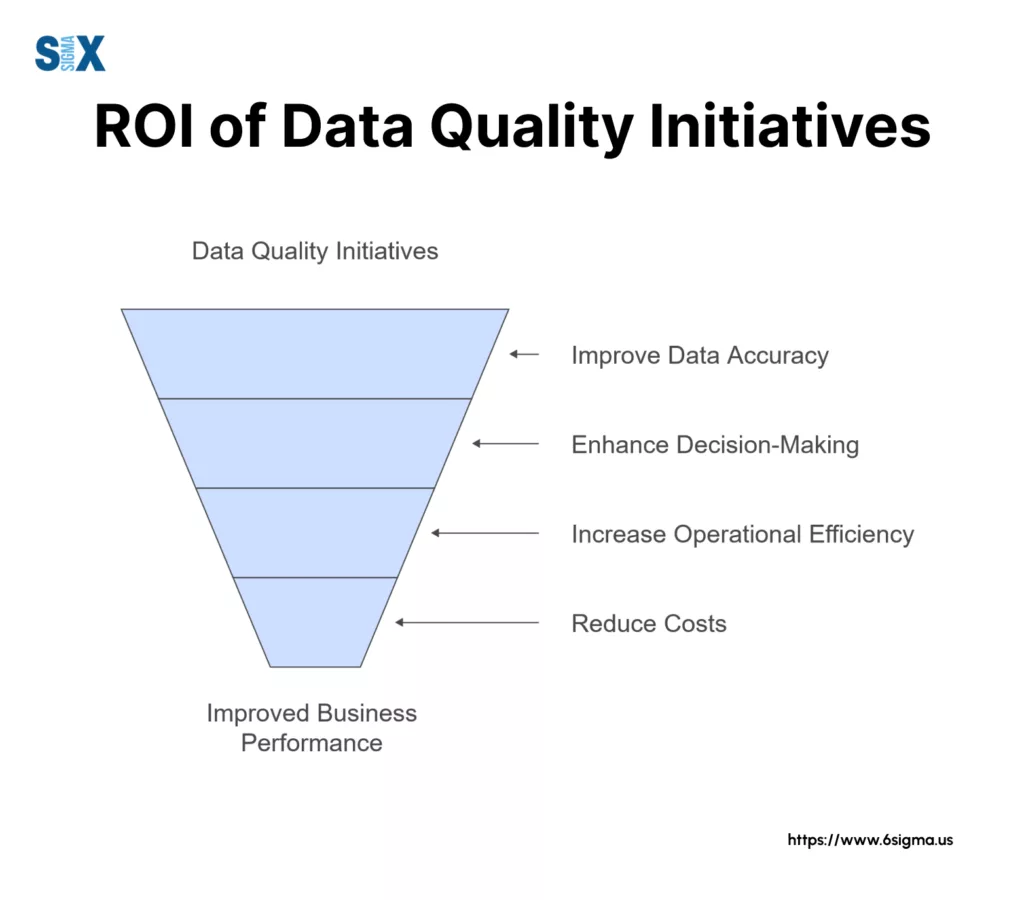

ROI of Data Quality Initiatives

One of the most common questions I encounter is, “What’s the return on investment for improving data quality dimensions?”

Case Study: Data Quality Transformation at a Global Manufacturing Corporation

We implemented a comprehensive data quality improvement program that yielded remarkable results. Here’s how we approached it:

Initial Situation:

- 23% error rate in inventory data

- $12 million annual losses from quality issues

- 45-minute average decision-making delay

- 67% trust level in data reports

Implementation Strategy:

1. Assessment Phase

- Conducted comprehensive data quality audit

- Identified critical pain points

- Established baseline metrics

- Developed improvement roadmap

2. Solution Implementation

- Automated data validation

- Real-time quality monitoring

- Staff training programs

- Process standardization

Results:

- 96% reduction in data errors

- $8.7 million annual cost savings

- 5-minute average decision time

- 98% data trust level

- 342% ROI within 18 months

Calculating the Financial Impact of Poor Data Quality

Here’s a framework for quantifying the cost of poor data quality:

Direct Costs:

1. Verification and Validation

- Manual data checking: $50-150 per hour

- Rework costs: 20-35% of employee time

- Validation software: $100-500K annually

2. Error Resolution

- Investigation time: $75-200 per incident

- Customer service impact: $25-150 per case

- Lost productivity: 15-25% of staff time

Indirect Costs:

1. Missed Opportunities

- Delayed decisions: $10K-1M per incident

- Lost customers: $500-5000 per customer

- Regulatory penalties: $10K-1M per violation

2. Reputation Damage

- Customer trust erosion: 5-15% revenue impact

- Brand value decrease: 3-7% market cap impact

- Employee satisfaction: 20-30% productivity impact
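
The direct-cost ranges above can be turned into a rough low and high estimate. This sketch uses only the per-hour and per-incident figures listed in the framework; the hours and incident counts you pass in are your own inputs, and the function name is hypothetical.

```python
def direct_cost_range(manual_check_hours, incidents,
                      hourly_rate=(50, 150), per_incident=(75, 200)):
    """Return (low, high) direct cost from checking hours and error incidents.

    Default rates are the manual-checking and investigation ranges listed above.
    """
    low = manual_check_hours * hourly_rate[0] + incidents * per_incident[0]
    high = manual_check_hours * hourly_rate[1] + incidents * per_incident[1]
    return low, high
```

Presenting the result as a range rather than a single figure keeps the estimate honest about how uncertain the underlying rates are.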

Long-term Benefits of Investing in Data Quality

There are several key long-term benefits:

1. Operational Efficiency

- ~40-60% reduction in processing time

- ~25-45% decrease in operational costs

- ~70-90% reduction in manual data validation

- ~30-50% improvement in resource utilization

2. Strategic Advantages

- Faster decision-making capability

- Improved customer satisfaction

- Enhanced competitive position

- Better regulatory compliance

3. Organizational Benefits

- Improved employee satisfaction

- Enhanced stakeholder confidence

- Better risk management

- Increased innovation capability

Measuring Long-term ROI

Here’s a framework for calculating long-term ROI:

1. Initial Investment

- Technology infrastructure: 30-40% of budget

- Training and development: 15-25%

- Process improvement: 20-30%

- Change management: 15-25%

2. Ongoing Costs

- Maintenance and updates: 10-15% annually

- Continuous training: 5-10% annually

- Quality monitoring: 8-12% annually

3. Returns Timeline

- Short-term (0-6 months): Cost reduction

- Medium-term (6-18 months): Efficiency gains

- Long-term (18+ months): Strategic advantages
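
The framework above can be combined with the standard ROI formula, net gain divided by cost. The sketch below makes one loud assumption: ongoing costs are modeled as a fixed fraction of the initial investment per year. The article gives annual percentages without stating their base, so treat that choice as illustrative.

```python
def roi_percent(total_benefit, total_cost):
    """Classic ROI: net gain divided by cost, expressed as a percentage."""
    if total_cost <= 0:
        raise ValueError("total_cost must be positive")
    return (total_benefit - total_cost) / total_cost * 100

def cumulative_roi(initial_investment, annual_benefit, annual_running_rate, years):
    """Cumulative ROI at the end of each year.

    annual_running_rate: ongoing cost per year as a fraction of the initial
    investment (an assumption made for this sketch).
    """
    results = []
    for year in range(1, years + 1):
        cost = initial_investment * (1 + annual_running_rate * year)
        results.append(roi_percent(annual_benefit * year, cost))
    return results
```

Tracking ROI year by year shows when the program crosses break-even, which fits the short-, medium-, and long-term returns timeline.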

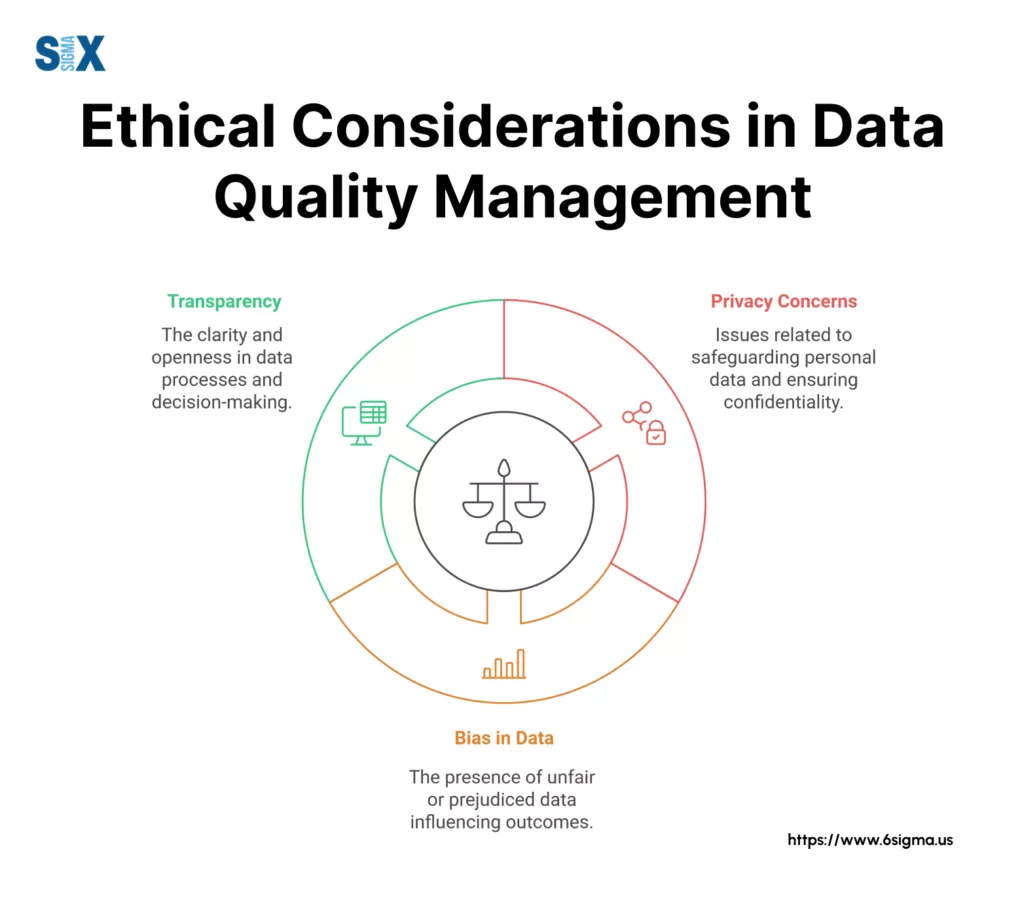

Ethical Considerations in Data Quality Management

Ethical considerations are just as crucial as technical aspects. Overlooking the ethical implications of data quality management could lead to serious consequences beyond just operational issues.

Privacy Concerns in Data Collection and Validation

Here’s a comprehensive framework for addressing privacy concerns while maintaining high data quality standards.

Key Privacy Considerations: Data Collection Ethics

- Informed consent protocols

- Minimal data collection principle

- Purpose limitation

- Data retention policies

Implementation Strategy:

1. Privacy by Design

- Built-in privacy controls

- Automated anonymization

- Access control matrices

- Audit trail systems

2. Validation Protocols

- Privacy-preserving validation techniques

- Secure data sampling methods

- Encrypted quality checks

- Protected reference data
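
One privacy-preserving validation technique is to run quality checks on keyed hashes instead of raw identifiers. The sketch below uses HMAC-SHA256 from the Python standard library to find duplicate identifiers without exposing them. Note that this is pseudonymization, not full anonymization, and the normalization rule and function names are assumptions.

```python
import hashlib
import hmac

def pseudonymize(value, secret_key):
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(secret_key, normalized, hashlib.sha256).hexdigest()

def duplicate_tokens(identifiers, secret_key):
    """Find repeated identifiers by comparing tokens instead of raw values."""
    seen, repeated = set(), set()
    for value in identifiers:
        token = pseudonymize(value, secret_key)
        if token in seen:
            repeated.add(token)
        else:
            seen.add(token)
    return repeated
```

The quality team sees only tokens, so a uniqueness audit can run without anyone on that team reading the underlying email addresses or account numbers. The secret key has to be stored and access-controlled like any other credential.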

Bias in Data and Its Impact on Decision-Making

I’ve seen how unconscious bias in data can significantly impact business decisions. Here’s how you can address this critical issue:

Types of Data Bias:

1. Collection Bias

- Sampling errors

- Selection bias

- Temporal bias

- Geographic bias

2. Processing Bias

- Algorithm bias

- Confirmation bias

- Measurement bias

- Interpretation bias

Mitigation Strategies:

1. Bias Detection

- Statistical analysis tools

- Regular bias audits

- Diverse data sources

- Cross-validation techniques

2. Bias Prevention

- Diverse team composition

- Standardized processes

- Regular training programs

- Independent reviews
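
A simple starting point for a bias audit is to compare how groups are represented in a dataset against a known reference distribution. This sketch flags selection or geographic bias only in that narrow sense; the 5-point tolerance and the group names in any usage are hypothetical.

```python
def representation_gaps(sample_counts, population_shares, tolerance=0.05):
    """Compare each group's share of the sample with its known population share."""
    total = sum(sample_counts.values())
    if total == 0:
        raise ValueError("sample_counts must contain at least one record")
    report = {}
    for group, expected in population_shares.items():
        observed = sample_counts.get(group, 0) / total
        report[group] = {
            "observed": observed,
            "expected": expected,
            "flagged": abs(observed - expected) > tolerance,
        }
    return report
```

A flagged group is a prompt for review, not proof of bias: the reference distribution itself may be outdated or may not match the population the decision actually affects.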

Transparency in Data Quality Processes

Here’s a transparency framework that balances openness with security:

Key Components:

1. Process Documentation

- Clear quality metrics

- Documented procedures

- Decision criteria

- Change management logs

2. Stakeholder Communication

- Regular quality reports

- Process explanations

- Impact assessments

- Feedback mechanisms

Best Practices for Ethical Data Quality Management:

1. Governance Framework

- Ethics committee oversight

- Regular compliance audits

- Clear accountability

- Documented policies

2. Training and Awareness

- Regular ethics training

- Privacy awareness programs

- Bias recognition workshops

- Transparency protocols

3. Monitoring and Review

- Ethics impact assessments

- Regular privacy audits

- Bias detection systems

- Transparency metrics

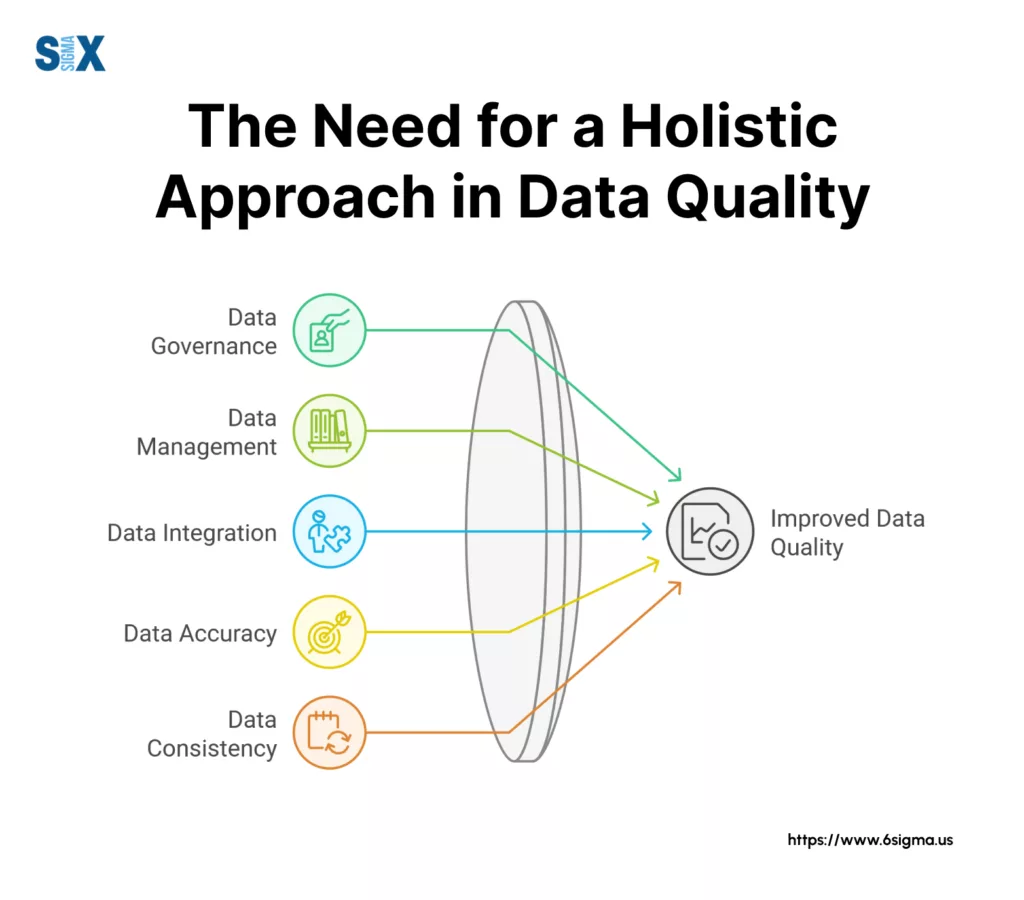

The Need for a Holistic Approach – Dimensions of Data Quality

Successful data quality management requires a comprehensive approach:

1. Strategic Integration

- Align data quality with business objectives

- Implement cross-functional governance

- Establish clear ownership and accountability

- Develop comprehensive measurement systems

2. Cultural Transformation

- Build a data-aware organization culture

- Foster a continuous improvement mindset

- Encourage cross-departmental collaboration

- Establish clear communication channels

3. Technical Excellence

- Deploy appropriate tools and technologies

- Implement automated monitoring systems

- Establish clear quality metrics

- Maintain robust documentation

Going Ahead

As we look to the future, I urge organizations to take decisive action on data quality:

1. Immediate Steps

- Assess current data quality levels

- Identify critical data elements

- Establish baseline metrics

- Develop improvement roadmap

2. Medium-term Goals

- Implement automated quality controls

- Establish governance frameworks

- Deploy monitoring systems

- Train staff on quality protocols

3. Long-term Vision

- Build a sustainable quality culture

- Achieve continuous improvement

- Maintain competitive advantage

- Drive innovation through quality data

Without solid data quality foundations, successful digital transformation is very difficult to achieve.

The question isn’t whether to invest in data quality, but rather how quickly you can begin the journey toward data excellence.

Start your data quality journey today. Your organization’s future success may depend on it.

SixSigma.us offers both Live Virtual classes and Online Self-Paced training. Most options include access to the same great Master Black Belt instructors who teach our World Class in-person sessions. Sign up today!
