The Ultimate Guide to Data Quality Testing: Ensure Reliable and Actionable Insights

Data quality is key to effective decision-making and overall business growth. Poor data quality can derail even the most promising business initiatives.

That’s why a systematic approach is required to validate and verify the data’s accuracy, completeness, and reliability.

Let’s look at how data quality testing can make your business operations and decisions more profitable.

This article will equip you to:

- Drive decisions with confidence, backed by information and sound data

- Bring down operations costs and reduce inefficiencies

- Improve customer satisfaction through accurate data management

- Ensure regulatory compliance and risk management

- Drive continuous improvement initiatives effectively

From my statistical background and extensive work in process improvement, I’ve learned that data quality isn’t just about having clean data; it’s about having reliable information that drives confident decision-making.

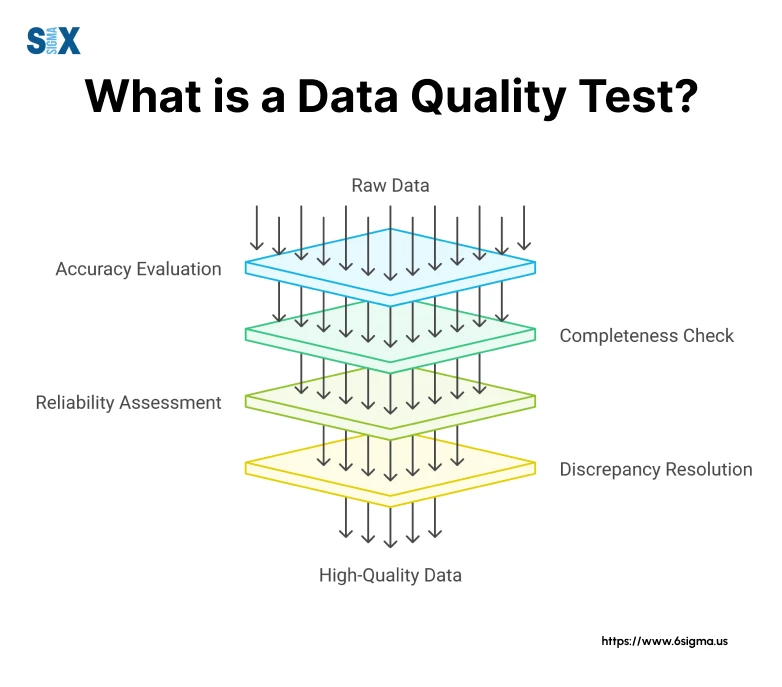

What is a Data Quality Test?

A data quality test is a systematic procedure for evaluating whether data meets specified quality standards and business requirements.

There are several essential types of data quality tests:

- Structural Tests: Verify data format and composition

- Content Tests: Evaluate the actual values within the data

- Relationship Tests: Examine connections between different data elements

- Business Rule Tests: Ensure compliance with specific business requirements

The 5 Key Criteria for Data Quality Testing

I’ve found these five criteria to be crucial for comprehensive data quality testing:

1. Accuracy

A small change in data accuracy can lead to significant cost savings. Accuracy testing ensures that data values correctly represent the real-world conditions they measure.

2. Completeness

Incomplete data can be more dangerous than having no data at all, because the gaps often go unnoticed and skew the analysis. Completeness testing verifies that all required data elements are present and populated.

3. Consistency

Inconsistent data across systems can derail entire projects. Consistency testing ensures uniform data representation across different platforms and processes.

4. Timeliness

Outdated data could lead to costly decisions. Timeliness testing verifies that data is available when needed and reflects current conditions.

5. Validity

Data that breaks the rules it is supposed to follow cannot be trusted downstream. Validity testing ensures that data conforms to the specified business rules and regulatory requirements.
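
As a minimal sketch of how some of these criteria might be turned into numbers, the Python example below scores completeness, validity, and timeliness on a few made-up records. The field names, the simple email pattern, the fixed reference date, and the 30-day freshness window are all illustrative assumptions, not prescribed standards.

```python
import re
from datetime import date

# Hypothetical customer records; None marks a missing value.
records = [
    {"id": 1, "email": "ana@example.com", "updated": date(2024, 5, 1)},
    {"id": 2, "email": None,              "updated": date(2024, 4, 28)},
    {"id": 3, "email": "not-an-email",    "updated": date(2023, 11, 2)},
    {"id": 4, "email": "li@example.org",  "updated": date(2024, 4, 30)},
]

EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")  # deliberately simple pattern
AS_OF = date(2024, 5, 2)   # assumed reference date, fixed so the result is reproducible
MAX_AGE_DAYS = 30          # assumed freshness window


def share(flags):
    """Return the fraction of True values in a list of booleans."""
    return sum(flags) / len(flags)


# Completeness: is the required field populated?
completeness = share([r["email"] is not None for r in records])
# Validity: does the populated value follow the expected format?
validity = share([bool(r["email"] and EMAIL_RE.match(r["email"])) for r in records])
# Timeliness: was the record refreshed within the allowed window?
timeliness = share([(AS_OF - r["updated"]).days <= MAX_AGE_DAYS for r in records])

print(f"completeness={completeness:.2f} validity={validity:.2f} timeliness={timeliness:.2f}")
```

Accuracy and consistency are left out here because both need something to compare against: a trusted reference for accuracy, and a second system for consistency.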

The Role of Data Quality Testing in Data Management

Data quality testing serves as the foundation for:

- Data Governance: Ensures alignment with organizational policies and standards

- Process Improvement: Identifies opportunities for enhancing data collection and handling

- Risk Management: Mitigates potential issues before they impact business operations

The relationship between data quality testing and data governance is particularly crucial. Effective data quality testing supports governance by:

- Establishing clear quality standards

- Providing measurable metrics for compliance

- Creating accountability in data management processes

- Supporting continuous improvement initiatives

Data Quality Testing Methods: Leveraging Six Sigma for Optimal Results

Choosing the right testing methods can make the difference between success and failure.

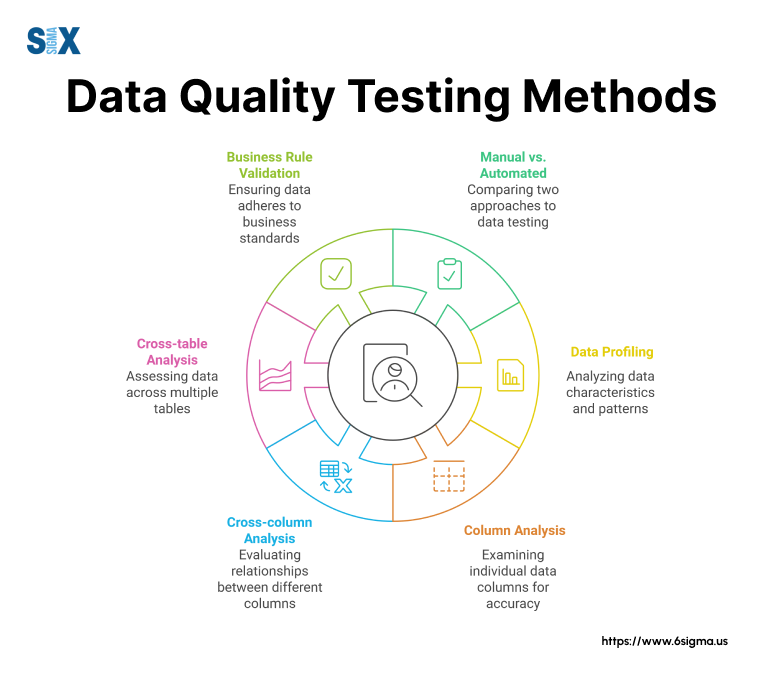

Manual vs. Automated Testing Approaches

I’ve seen data quality testing evolve from purely manual processes to sophisticated automated systems. Each approach has its place in a comprehensive testing strategy.

Manual Testing Advantages:

- Allows for intuitive pattern recognition

- Provides a deeper contextual understanding

- Enables flexible investigation of anomalies

Manual testing is particularly effective for:

- Initial data exploration

- Complex business rule validation

- Investigation of unusual patterns

Automated Testing Benefits:

- Scales efficiently across large datasets

- Ensures consistent application of rules

- Provides real-time monitoring capabilities

Common Data Quality Testing Techniques

Here are several key testing techniques that consistently deliver results:

1. Data Profiling

A comprehensive data profiling approach includes:

- Statistical distribution analysis

- Pattern recognition

- Outlier identification

- Relationship mapping
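
To make the profiling steps above more concrete, here is a small sketch using only the Python standard library. It summarizes a numeric column, flags outliers with the common 1.5 x IQR rule, and reduces text values to "shapes" so that unusual patterns stand out. The sample values and the `shape` helper are hypothetical, and the IQR rule is just one of several reasonable outlier definitions.

```python
import re
import statistics
from collections import Counter

# Hypothetical sample columns.
order_values = [12, 14, 15, 15, 16, 17, 18, 19, 20, 95]
phone_numbers = ["555-0101", "555-0102", "5550103", "555-01O4"]

# Statistical distribution: quartiles and interquartile range.
q1, median, q3 = statistics.quantiles(order_values, n=4)
iqr = q3 - q1

# Outlier identification: values beyond 1.5 * IQR from the quartiles.
low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
outliers = [v for v in order_values if v < low_fence or v > high_fence]


def shape(value):
    """Replace letters with 'A' and digits with '9' to expose the format."""
    value = re.sub(r"[A-Za-z]", "A", value)
    return re.sub(r"\d", "9", value)


# Pattern recognition: count how often each format occurs.
pattern_counts = Counter(shape(p) for p in phone_numbers)

print("quartiles:", q1, median, q3)
print("outliers:", outliers)
print("patterns:", pattern_counts.most_common())
```

Rare shapes, such as the one produced by a letter "O" typed in place of a zero, are usually the first candidates for a closer manual look.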

2. Column Analysis

This technique involves:

- Data type verification

- Value range validation

- Format consistency checks

- Null value analysis
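
The four checks above can be sketched for a single column as follows. This is an illustrative example only: the column names, the 0 to 120 age range, and the five-digit postcode format are assumptions chosen for the demo, and raw values are treated as strings, as they would arrive from a CSV export.

```python
import re

# Hypothetical raw column values as they might arrive from a CSV export.
columns = {
    "age": ["34", "41", "", "abc", "230"],
    "postcode": ["90210", "1234", "10001", "", "94105"],
}


def analyze_int_column(values, low, high):
    """Count nulls, values that are not integers, and integers outside [low, high]."""
    report = {"nulls": 0, "wrong_type": 0, "out_of_range": 0}
    for raw in values:
        text = raw.strip()
        if not text:
            report["nulls"] += 1
            continue
        try:
            number = int(text)
        except ValueError:
            report["wrong_type"] += 1
            continue
        if not low <= number <= high:
            report["out_of_range"] += 1
    return report


def analyze_format(values, pattern):
    """Count nulls and populated values that do not fully match the pattern."""
    regex = re.compile(pattern)
    filled = [v.strip() for v in values if v.strip()]
    return {
        "nulls": len(values) - len(filled),
        "bad_format": sum(1 for v in filled if not regex.fullmatch(v)),
    }


age_report = analyze_int_column(columns["age"], low=0, high=120)
postcode_report = analyze_format(columns["postcode"], r"\d{5}")
print(age_report)
print(postcode_report)
```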

3. Cross-column Analysis

Cross-column analysis is used to:

- Validate business rules

- Identify correlations

- Verify logical relationships

- Ensure data consistency
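
As a rough illustration of cross-column checks, the sketch below tests two logical relationships within each order row: a shipment cannot precede its order, and the total must equal quantity times unit price. The order data and rule names are invented for the example, and amounts are kept in integer cents to avoid floating-point rounding surprises.

```python
from datetime import date

# Hypothetical order rows; amounts are stored in cents.
orders = [
    {"id": "A1", "ordered": date(2024, 3, 1), "shipped": date(2024, 3, 3),
     "qty": 2, "unit_cents": 1500, "total_cents": 3000},
    {"id": "A2", "ordered": date(2024, 3, 5), "shipped": date(2024, 3, 4),
     "qty": 1, "unit_cents": 999, "total_cents": 999},
    {"id": "A3", "ordered": date(2024, 3, 6), "shipped": None,
     "qty": 3, "unit_cents": 250, "total_cents": 700},
]

# Each rule looks at more than one column of the same row.
RULES = {
    # An unshipped order (None) is allowed; a shipped one must not predate the order.
    "shipped_on_or_after_ordered":
        lambda o: o["shipped"] is None or o["shipped"] >= o["ordered"],
    "total_matches_qty_times_price":
        lambda o: o["total_cents"] == o["qty"] * o["unit_cents"],
}

violations = [
    (o["id"], name)
    for o in orders
    for name, rule in RULES.items()
    if not rule(o)
]

for order_id, rule_name in violations:
    print(f"order {order_id} violates {rule_name}")
```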

4. Cross-table Analysis

Cross-table analysis is essential for:

- Referential integrity validation

- Data relationship verification

- End-to-end process validation
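
Referential integrity validation, the first item above, can be sketched in a few lines. The example assumes two hypothetical tables held as lists of dictionaries; in a real database the same idea is usually expressed as an anti-join or enforced with foreign key constraints.

```python
# Hypothetical parent and child tables.
customers = [
    {"customer_id": 1, "name": "Ana"},
    {"customer_id": 2, "name": "Li"},
    {"customer_id": 3, "name": "Sam"},
]
orders = [
    {"order_id": 100, "customer_id": 1},
    {"order_id": 101, "customer_id": 4},     # points to a customer that does not exist
    {"order_id": 102, "customer_id": 2},
    {"order_id": 103, "customer_id": None},  # missing foreign key
]

known_ids = {c["customer_id"] for c in customers}
referenced_ids = {o["customer_id"] for o in orders}

# Orphans: child rows whose key has no matching parent row.
orphan_orders = [o["order_id"] for o in orders if o["customer_id"] not in known_ids]

# Parents with no children are not errors, but they are often worth reviewing.
customers_without_orders = sorted(known_ids - referenced_ids)

print("orphan orders:", orphan_orders)
print("customers without orders:", customers_without_orders)
```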

5. Business Rule Validation

Effective business rule validation must:

- Align with organizational policies

- Reflect regulatory requirements

- Support business objectives

- Enable process improvement

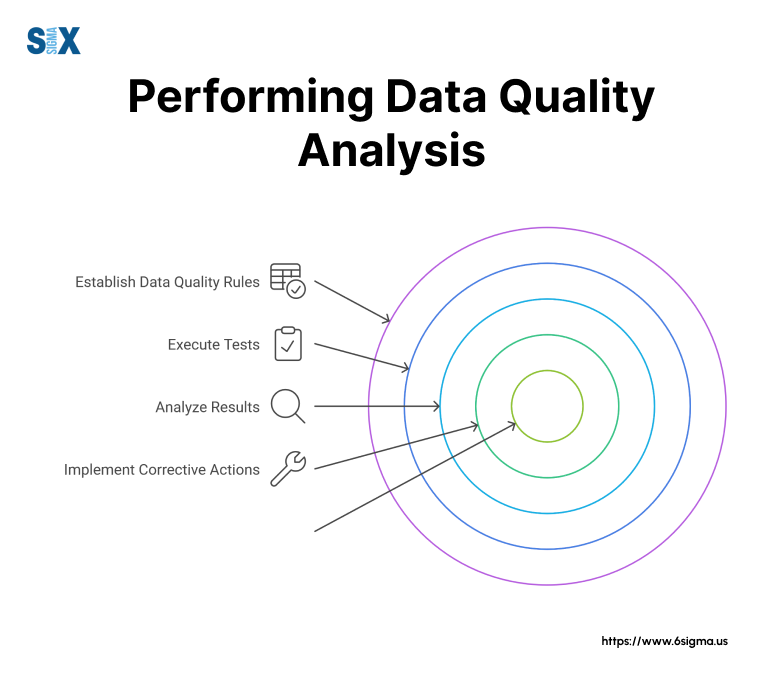

Step-by-Step Guide to Performing Data Quality Analysis

Here’s a proven approach to performing effective data quality analysis.

Define Data Quality Objectives

- Set specific quality targets

- Define measurement criteria

- Align with business objectives

Identify Critical Data Elements

- Map data dependencies

- Prioritize critical elements

- Assess business impact

- Document data relationships

Establish Data Quality Rules

- Create clear validation criteria

- Define acceptable thresholds

- Document test procedures

- Establish monitoring protocols
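
One way to capture validation criteria together with their acceptable thresholds is to store each rule as data. The sketch below is a hedged illustration: `QualityRule`, the product rows, and the threshold values are all hypothetical, and a real implementation would likely load rules from a shared, documented catalog.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class QualityRule:
    name: str
    check: Callable[[dict], bool]  # returns True when a row passes
    min_pass_rate: float           # acceptable threshold, between 0 and 1


# Hypothetical product rows.
rows = [
    {"sku": "P-001", "price": 19.99, "stock": 12},
    {"sku": "P-002", "price": 0.0,   "stock": 4},
    {"sku": "",      "price": 5.49,  "stock": 7},
    {"sku": "P-004", "price": 12.00, "stock": -1},
    {"sku": "P-005", "price": 3.25,  "stock": 0},
]

rules = [
    QualityRule("sku_present", lambda r: bool(r["sku"]), 0.95),
    QualityRule("price_positive", lambda r: r["price"] > 0, 0.80),
    QualityRule("stock_non_negative", lambda r: r["stock"] >= 0, 0.80),
]


def evaluate(rule, data):
    """Apply one rule to every row and compare the pass rate to its threshold."""
    pass_rate = sum(rule.check(r) for r in data) / len(data)
    return {"rule": rule.name, "pass_rate": pass_rate,
            "passed": pass_rate >= rule.min_pass_rate}


results = [evaluate(rule, rows) for rule in rules]
for result in results:
    status = "PASS" if result["passed"] else "FAIL"
    print(f"{result['rule']}: {result['pass_rate']:.0%} {status}")
```

Keeping the threshold next to the rule makes it easy to review and adjust both during later test cycles.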

Execute Tests

- Run tests in a controlled environment

- Document all test results

- Track anomalies and exceptions

- Maintain test logs

Analyze Results

- Apply root cause analysis

- Identify patterns and trends

- Quantify the impact of issues

- Prioritize corrections
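
A Pareto analysis is one common way to identify patterns and prioritize corrections. The sketch below counts failures per rule from a made-up test log and finds the "vital few" rules that account for at least 80% of all failures. The failure names, the counts, and the 80% cut-off are assumptions for illustration.

```python
from collections import Counter

# Hypothetical failure log: one entry per failed check.
failures = (
    ["missing_email"] * 42
    + ["bad_postcode"] * 31
    + ["duplicate_customer"] * 15
    + ["future_order_date"] * 8
    + ["negative_quantity"] * 4
)

counts = Counter(failures)
total = sum(counts.values())

# Build the Pareto table: rule, count, cumulative share of all failures.
pareto = []
cumulative = 0
for rule, n in counts.most_common():
    cumulative += n
    pareto.append((rule, n, cumulative / total))

# The "vital few": the smallest set of top rules covering at least 80% of failures.
vital_few = []
for rule, n, cumulative_share in pareto:
    vital_few.append(rule)
    if cumulative_share >= 0.80:
        break

for rule, n, cumulative_share in pareto:
    print(f"{rule:<20} {n:>3} {cumulative_share:.0%}")
print("fix first:", vital_few)
```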

Implement Corrective Actions

- Develop targeted solutions

- Test fixes before implementation

- Monitor the impact of changes

- Document improvements

Monitor and Repeat

- Run regular testing cycles

- Monitor performance on an ongoing basis

- Review rules periodically

- Adjust thresholds as needed
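
For ongoing monitoring, a control chart from statistical process control is a natural fit. The following sketch applies a p-chart to a hypothetical week of daily test runs and flags any day whose failure rate falls outside three-sigma limits. The daily figures are invented for the example.

```python
import math

# Hypothetical daily results: (records checked, records failing any rule).
daily = [(1000, 21), (1000, 18), (1000, 25), (1000, 19),
         (1000, 22), (1000, 48), (1000, 20)]

# Center line: overall proportion of failing records.
p_bar = sum(failed for _, failed in daily) / sum(n for n, _ in daily)


def control_limits(n):
    """Three-sigma p-chart limits for a sample of size n."""
    sigma = math.sqrt(p_bar * (1 - p_bar) / n)
    return max(0.0, p_bar - 3 * sigma), p_bar + 3 * sigma


# Days whose failure rate falls outside the control limits.
signals = []
for day, (n, failed) in enumerate(daily, start=1):
    lcl, ucl = control_limits(n)
    rate = failed / n
    if not lcl <= rate <= ucl:
        signals.append(day)
    print(f"day {day}: rate={rate:.3f} limits=({lcl:.3f}, {ucl:.3f})")

print("out-of-control days:", signals)
```

In practice the limits are usually calculated from a stable baseline period rather than from the same data being judged, so that an unusual day does not widen its own limits.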

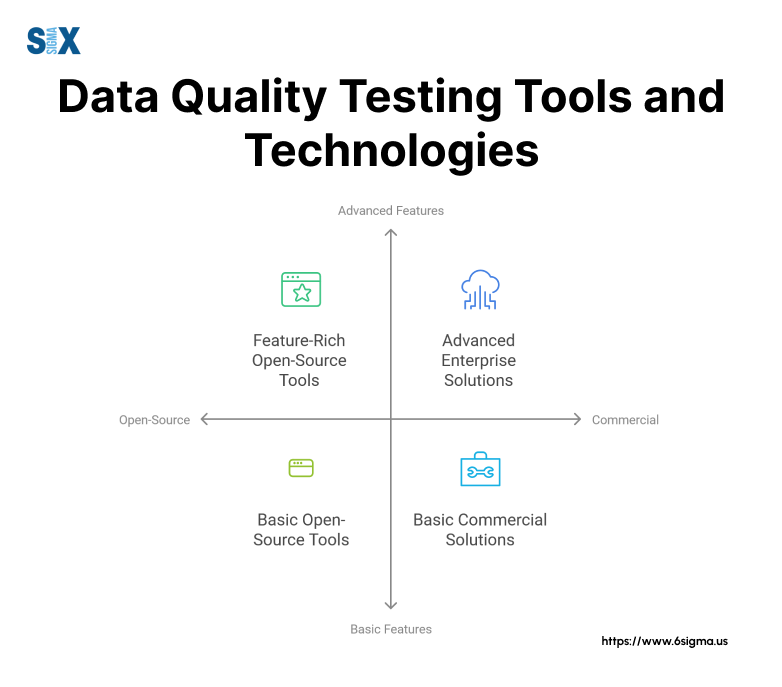

Data Quality Testing Tools and Technologies

I’ve evaluated and implemented numerous data quality tools, both open-source and commercial. Based on real-world implementations, here’s my assessment.

Open-Source Options:

- Great Expectations: You can use this framework for Python-based data validation

- Apache Griffin: You can use this for big data quality management

- dbt (data build tool): At HP, we leveraged this for SQL-based testing and documentation

Commercial Solutions:

- Informatica Data Quality: You can use it as an enterprise-scale solution

- IBM InfoSphere Information Server: This proves invaluable for complex data quality scenarios

- Talend Data Quality: This is particularly effective for mid-sized organizations

Comparing Top Data Quality Tools

Based on my experience implementing these tools across various industries, here’s what you should consider:

Features:

- Data profiling capabilities

- Automated validation rules

- Real-time monitoring

- Customizable workflows

- Reporting and analytics

Pricing:

- Enterprise solutions: $100K+ annually (full feature set)

- Mid-tier options: $25K-$100K annually

- Open-source: Free but requires technical expertise

Ease of Use:

- Business user-friendly interfaces

- Technical complexity requirements

- Learning curve considerations

- Available support and documentation

Integration Capabilities:

- Database connectivity

- API availability

- Cloud platform support

- ETL tool integration

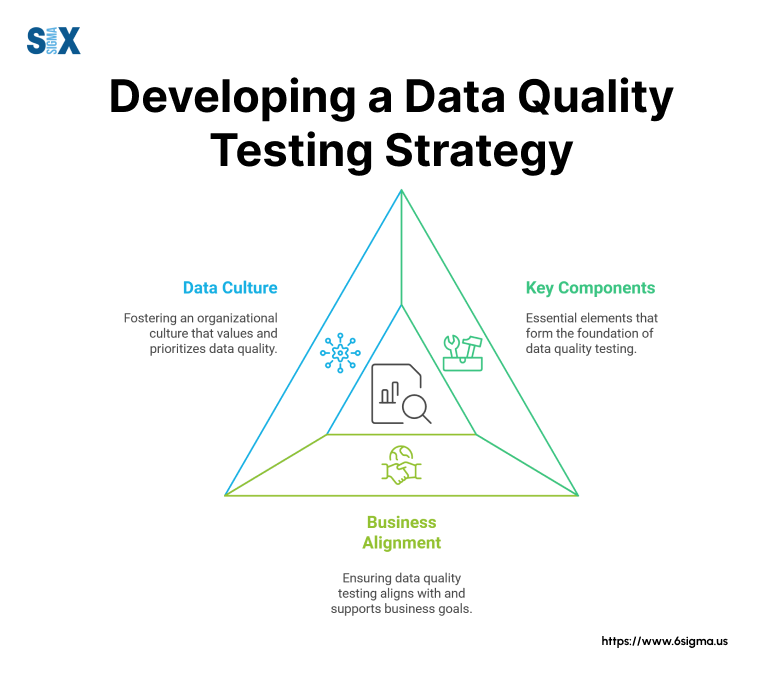

Developing a Data Quality Testing Strategy: Lessons from the Field

Success depends on a well-structured approach aligned with organizational objectives. Here’s a framework that can consistently deliver results.

Key Components of a Robust Strategy

The essential elements of an effective data quality testing strategy are:

1. Clear Governance Structure

- Defined roles and responsibilities

- Established decision-making protocols

- Created accountability mechanisms

- Set performance metrics

2. Standardized Testing Procedures

- Test case development guidelines

- Quality metrics definitions

- Documentation requirements

- Review and approval processes

3. Resource Allocation

- Technical infrastructure

- Skilled personnel

- Training programs

- Tools and technologies

Aligning Data Quality Testing with Business Objectives

Successful data quality testing strategies must directly support business goals. You can achieve this by:

1. Identifying Critical Business Processes

- Mapping data dependencies

- Prioritizing testing efforts

- Establishing quality thresholds

- Defining success criteria

2. Setting Measurable Objectives

- Define specific quality targets

- Establish measurement systems

- Create monitoring mechanisms

- Implement feedback loops

3. Developing ROI Metrics

- Cost savings from error prevention

- Efficiency improvements

- Risk reduction

- Customer satisfaction impact

Building a Data Quality Culture

One of the most crucial steps is cultural transformation. You can build a data quality culture through:

1. Executive Sponsorship

- Securing leadership commitment

- Demonstrating business value

- Allocating necessary resources

- Celebrating successes

2. Training and Education

- Role-specific training programs

- Hands-on practical exercises

- Regular knowledge sharing

- Continuous improvement initiatives

3. Communication Strategy

- Regular status updates

- Success story sharing

- Challenge discussion forums

- Progress visualization

Remember, developing an effective data quality testing strategy isn’t a one-time effort. It requires continuous refinement and adaptation. The key is to create a framework that’s both robust and flexible, capable of evolving with your organization’s needs while maintaining consistent quality standards.

Industry-Specific Data Quality Challenges and Solutions

Each industry faces unique challenges requiring tailored solutions.

Healthcare Data Quality

Let’s look at the critical challenges and best practices:

Unique Challenges:

- Patient data accuracy and completeness

- Integration of diverse medical systems

- Compliance with HIPAA regulations

- Real-time data accessibility

Best Practices:

- Implement automated validation checks for patient identifiers

- Establish standardized data entry protocols

- Create robust audit trails

- Deploy real-time quality monitoring systems

Financial Services

Data quality testing in this sector requires particular attention to regulatory compliance and accuracy.

Regulatory Requirements:

- Meeting Basel III reporting requirements

- Ensuring SOX compliance

- Maintaining GDPR standards

- Supporting risk management protocols

Strategies for Financial Data Integrity:

- Deploy automated reconciliation systems

- Implement multi-level validation checks

- Establish clear data lineage

- Create comprehensive audit trails
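
The first strategy above, automated reconciliation, can be sketched as a comparison of balances between two systems. The account identifiers, the amounts in cents, and the `reconcile` helper are hypothetical; production reconciliation typically adds matching on dates, currencies, and transaction-level detail.

```python
# Hypothetical balances in cents from two systems that should agree.
ledger = {"ACC-1": 125_000, "ACC-2": 8_450, "ACC-3": 99_900}
warehouse = {"ACC-1": 125_000, "ACC-2": 8_540, "ACC-4": 1_000}


def reconcile(source, target, tolerance=0):
    """Report keys missing on either side and balances differing by more than tolerance."""
    report = {
        "missing_in_target": sorted(source.keys() - target.keys()),
        "missing_in_source": sorted(target.keys() - source.keys()),
        "mismatched": {},
    }
    for key in sorted(source.keys() & target.keys()):
        difference = target[key] - source[key]
        if abs(difference) > tolerance:
            report["mismatched"][key] = difference
    return report


report = reconcile(ledger, warehouse)
print(report)
```

Here the 90-cent difference on ACC-2 looks like a transposed digit (8,450 versus 8,540), which is the kind of error a reconciliation check is meant to surface.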

E-commerce

My work with online retailers has revealed unique challenges in managing high-volume, real-time data quality.

Data Quality Issues:

- Product catalog accuracy

- Customer data integration

- Inventory synchronization

- Transaction data reliability

Improvement Techniques:

- Implement real-time data validation

- Deploy automated catalog management systems

- Establish customer data verification protocols

- Create integrated quality monitoring dashboards
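
Customer data integration problems often show up as duplicate profiles. As a simple illustration of a customer data verification check, the sketch below groups hypothetical profiles by a normalized email address. Matching on email alone is an assumption made to keep the example short; real matching usually combines several fields and some fuzzy comparison.

```python
from collections import defaultdict

# Hypothetical customer profiles collected from different touchpoints.
profiles = [
    {"id": 1, "name": "Ana Diaz", "email": "Ana.Diaz@Example.com "},
    {"id": 2, "name": "ana diaz", "email": "ana.diaz@example.com"},
    {"id": 3, "name": "Li Wei",   "email": "li.wei@example.org"},
    {"id": 4, "name": "A. Diaz",  "email": " ana.diaz@example.com"},
]


def normalize_email(email):
    """Trim surrounding whitespace and lowercase so trivial variants compare equal."""
    return email.strip().lower()


# Group profile ids by normalized email.
groups = defaultdict(list)
for profile in profiles:
    groups[normalize_email(profile["email"])].append(profile["id"])

# Any email shared by more than one profile is a candidate duplicate.
duplicates = {email: ids for email, ids in groups.items() if len(ids) > 1}
print(duplicates)
```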

Successful data quality testing in any industry requires a combination of industry-specific knowledge and proven quality methodologies. The key is adapting Six Sigma principles to address each sector’s unique challenges while maintaining consistent quality standards.

Case Studies: Impact of Data Quality Testing

Let’s look at some case studies that demonstrate the tangible benefits of robust data quality management.

Case Study 1: Improving Customer Experience at a Global Technology Company

A technology company tackled a significant customer experience challenge stemming from data quality issues. The company was experiencing:

- 15% customer contact information error rate

- Delayed shipping due to address inconsistencies

- Multiple customer profiles causing communication issues

By implementing comprehensive data quality testing, the company:

- Reduced contact information errors to <1%

- Improved delivery accuracy by 23%

- Consolidated customer profiles, leading to 35% better engagement rates

- Achieved $2.3M annual savings in shipping costs

The key to success was implementing automated data quality checks at every customer touchpoint.

Case Study 2: Cost Savings Through Data Quality Management at a Manufacturing Giant

A multinational company operating in the industrial, worker safety, and consumer goods sectors transformed its inventory management through enhanced data quality testing:

- Initial state: 12% inventory discrepancy rate

- $5M annual losses from incorrect stock levels

- Production delays due to data inaccuracies

The solution included:

- Implementing real-time data validation

- Establishing automated quality checks

- Creating cross-system data reconciliation processes

Results:

- Reduced inventory discrepancies to 0.5%

- Saved $4.2M annually

- Improved production efficiency by 18%

Case Study 3: Enhancing Decision-Making at a Financial Institution

A major bank revolutionized its decision-making processes through improved data quality testing:

- Previous situation: 25% of reports contained inconsistencies

- Strategic decisions delayed by data verification needs

- Regulatory compliance risks due to data quality issues

Implementation strategy:

- Deployed automated data quality testing frameworks

- Established real-time validation protocols

- Created comprehensive data quality dashboards

Outcomes:

- Reduced reporting errors to <2%

- Accelerated decision-making by 40%

- Achieved 100% regulatory compliance

- Saved $3.5M in audit-related costs

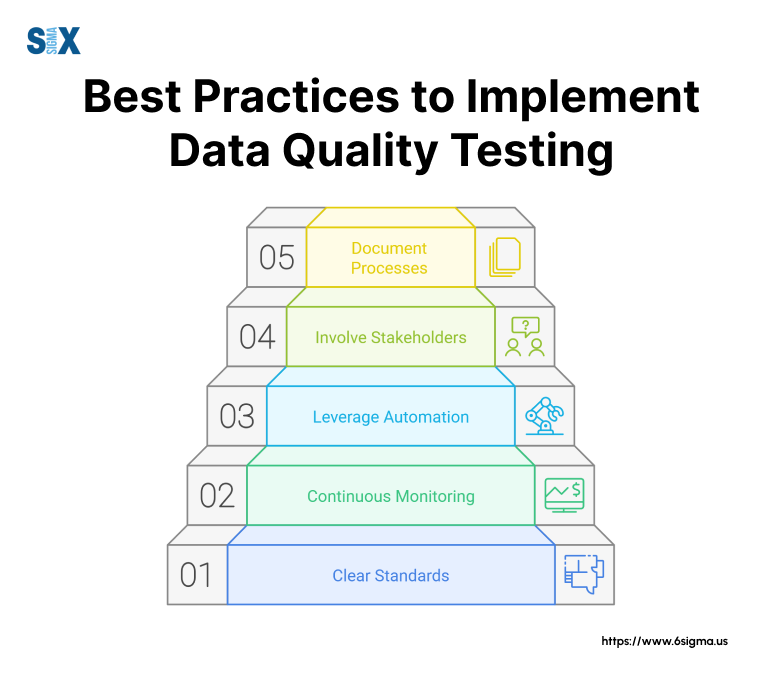

Best Practices for Implementing Data Quality Testing

A set of best practices can help you consistently deliver superior results. Let’s look at some proven strategies that have helped organizations achieve and maintain high data quality standards.

Establishing Clear Data Quality Standards

1. Define Measurable Quality Metrics

- Establish specific thresholds for accuracy

- Set clear completeness requirements

- Define timeliness parameters

- Document validation rules

2. Create Standard Operating Procedures

- Develop detailed testing protocols

- Establish quality control checkpoints

- Define escalation procedures

- Document exception handling processes
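
One measurable quality metric borrowed from Six Sigma is defects per million opportunities (DPMO), calculated as defects divided by the product of units and opportunities per unit, times one million. The sketch below applies it to data records, treating each checked field as one opportunity for a defect. The record counts are invented, and the sigma-level conversion uses the conventional 1.5-sigma shift, which is a long-standing convention rather than a law.

```python
from statistics import NormalDist


def dpmo(defects, units, opportunities_per_unit):
    """Defects per million opportunities."""
    return defects * 1_000_000 / (units * opportunities_per_unit)


def sigma_level(dpmo_value, shift=1.5):
    """Approximate sigma level using the conventional 1.5-sigma shift.

    Requires 0 < dpmo_value < 1,000,000, because inv_cdf is undefined at 0 and 1.
    """
    process_yield = 1 - dpmo_value / 1_000_000
    return NormalDist().inv_cdf(process_yield) + shift


# Hypothetical test run: 5,000 records, 4 validated fields each, 130 failed field checks.
records_checked = 5_000
fields_per_record = 4
defects_found = 130

value = dpmo(defects_found, records_checked, fields_per_record)
print(f"DPMO: {value:.0f}, sigma level: {sigma_level(value):.2f}")
```

Expressing data defects this way lets a team set an accuracy threshold in the same terms it already uses for other processes.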

Implementing Continuous Monitoring and Testing

1. Regular Testing Cycles

- Schedule automated quality checks

- Implement real-time monitoring

- Conduct periodic manual reviews

- Establish feedback loops

2. Performance Tracking

- Monitor key quality indicators

- Analyze trends over time

- Document improvement metrics

- Report regular status updates

Leveraging Automation for Efficiency

1. Strategic Tool Selection

- Choose appropriate testing tools

- Implement automated workflows

- Establish validation rules

- Configure alert mechanisms

2. Process Integration

- Align with existing workflows

- Integrate with data pipelines

- Enable automated reporting

- Implement corrective actions

Involving Stakeholders

1. Cross-functional Collaboration

- Engage business users

- Include technical teams

- Involve quality assurance

- Maintain executive support

2. Clear Communication Channels

- Regular status meetings

- Documented procedures

- Training programs

- Feedback mechanisms

Documenting and Communicating Processes

1. Process Documentation

- Detail testing procedures

- Document quality standards

- Maintain test cases

- Record validation rules

2. Communication Strategy

- Regular status updates

- Performance dashboards

- Issue tracking systems

- Success metrics reporting

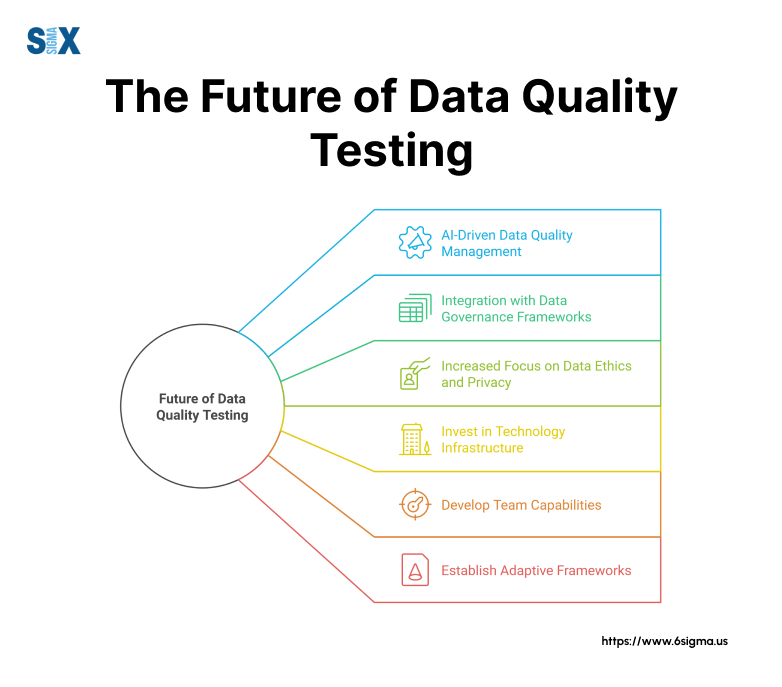

The Future of Data Quality Testing

We’re on the cusp of a revolutionary transformation. Let’s look at where data quality testing is headed.

Trends Shaping the Future of Data Quality

AI-Driven Data Quality Management

- Automated anomaly detection

- Predictive quality analytics

- Self-learning validation rules

- Real-time quality adjustments

Integration with Data Governance Frameworks

- Automated compliance monitoring

- Integrated quality metrics

- Real-time governance controls

- Dynamic policy enforcement

Increased Focus on Data Ethics and Privacy

- Ethical data handling

- Privacy-preserving testing methods

- Regulatory compliance automation

- Transparent quality processes

Preparing for Future Challenges

Organizations should:

Invest in Technology Infrastructure

- Upgrade testing capabilities

- Implement AI-ready platforms

- Enable real-time monitoring

- Build scalable solutions

Develop Team Capabilities

- Train in emerging technologies

- Build AI/ML expertise

- Foster ethical awareness

- Enhance analytical skills

Establish Adaptive Frameworks

- Create flexible testing protocols

- Implement agile methodologies

- Enable rapid adaptation

- Maintain continuous improvement

What’s Next: Efficiency Through Data Quality Testing

Data quality testing is now a business imperative that directly impacts your bottom line.

Remember that successful data quality testing requires:

- A comprehensive testing strategy aligned with business objectives

- Robust methodologies for validation and verification

- Appropriate tools and technologies

- Engaged stakeholders across all levels

- Continuous monitoring and improvement

Take the first step toward improving your data quality testing processes today. Start by assessing your current practices, identifying gaps, and developing a roadmap for improvement.

SixSigma.us offers both Live Virtual classes and Online Self-Paced training. Most options include access to the same great Master Black Belt instructors who teach our World Class in-person sessions. Sign up today!
