The Guide to Data Quality Assurance: Ensuring Accuracy and Reliability in Your Data

Maintaining high-quality data has become crucial for organizations seeking to make informed decisions and drive growth.

Data quality assurance serves as the backbone of reliable data management, ensuring that organizations can trust their data for critical business operations.

Key Highlights

- Data Quality Principles And Standards

- Implementing Quality Assurance Frameworks

- Tools For Data Quality Management

- Future Trends In Quality Assurance

What is Data Quality Assurance?

Data quality assurance represents a systematic approach to verifying data accuracy, completeness, and reliability throughout its lifecycle.

This process involves monitoring, maintaining, and enhancing data quality through established protocols and standards.

Organizations implement data quality assurance to prevent errors, eliminate inconsistencies, and maintain data integrity across their systems.

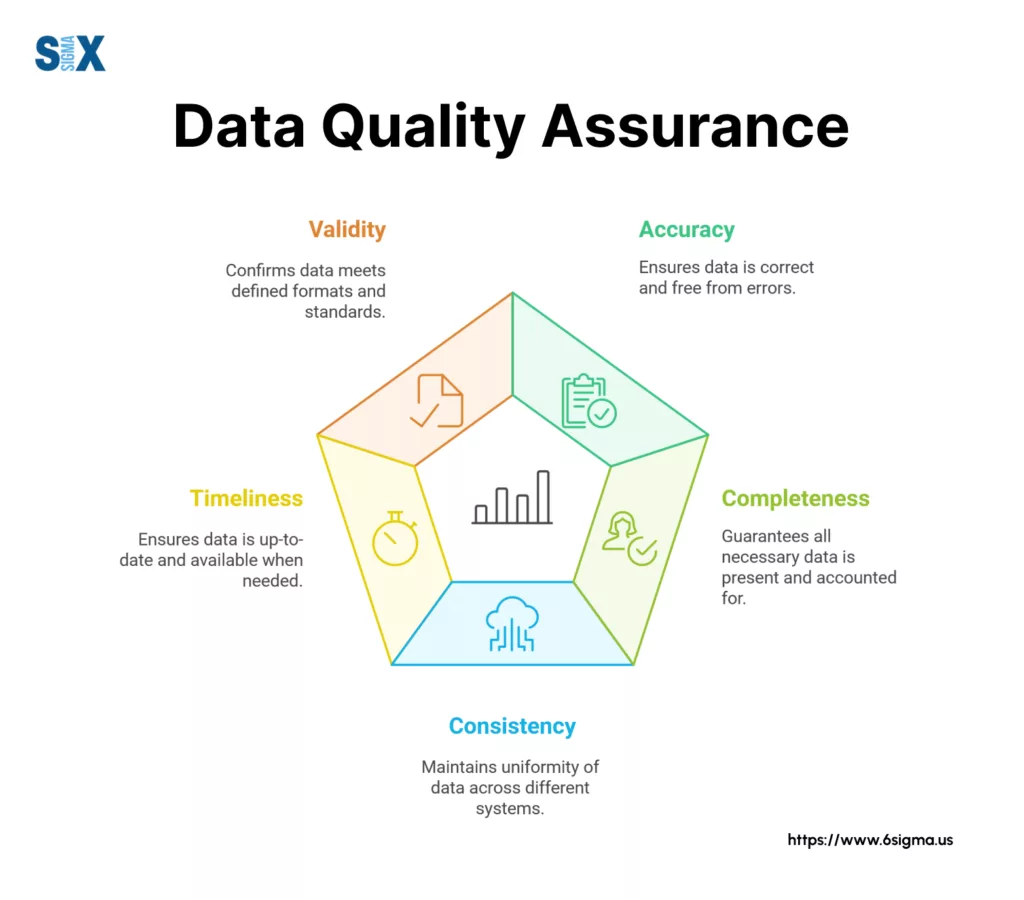

The Five Pillars Of Data Quality

The strength of any data quality assurance program rests on five essential pillars that guide its implementation and success.

Accuracy stands as the first pillar, ensuring that data reflects real-world conditions without errors or discrepancies. For example, customer addresses must match their actual locations to prevent delivery issues and maintain service quality.

Completeness forms the second pillar, requiring all necessary data fields to contain relevant information. Missing contact details or incomplete transaction records can severely impact business operations and customer relationships.

Consistency represents the third pillar, maintaining uniform data representation across different systems. When customer information varies between sales and support databases, it creates confusion and reduces operational efficiency.

Timeliness serves as the fourth pillar, ensuring data remains current and updated regularly. Outdated inventory numbers or pricing information can lead to poor business decisions and customer dissatisfaction.

Validity emerges as the fifth pillar, confirming that data meets defined business rules and formats. Phone numbers must follow standard patterns, and email addresses need proper structures to maintain data integrity.
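
A validity rule of this kind can be sketched in a few lines of Python. The phone and email patterns below are illustrative assumptions, not a standard; real rules depend on the formats your business accepts.

```python
import re

# Assumed formats, for illustration only.
PHONE_PATTERN = re.compile(r"\d{3}-\d{3}-\d{4}")          # e.g. 555-123-4567
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")   # loose structural check


def is_valid_phone(value: str) -> bool:
    """Return True when the whole string matches the assumed phone format."""
    return PHONE_PATTERN.fullmatch(value) is not None


def is_valid_email(value: str) -> bool:
    """Return True when the string has a local part, an @, and a dotted domain."""
    return EMAIL_PATTERN.fullmatch(value) is not None
```

The email pattern only checks structure; it does not confirm that the address exists or can receive mail.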

Essential Components Of Data Quality Assurance

Data quality assurance requires several interconnected components working together to maintain high standards.

The data quality framework begins with clear policies that define quality standards and expectations. These policies guide the implementation of quality metrics and monitoring systems.

Data profiling tools analyze existing datasets to identify patterns and anomalies.

Regular audits verify compliance with established standards, while cleaning processes remove or correct problematic data entries.

Quality metrics provide measurable indicators of data health, enabling teams to track improvements and identify areas needing attention.

These metrics might include error rates, completeness scores, and update frequencies.
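
As a rough sketch, the three metrics named above could be computed as follows. The field names, the record layout (a list of dictionaries), and the function names are hypothetical and chosen only for this example.

```python
from datetime import date

REQUIRED_FIELDS = ("name", "email", "updated")  # hypothetical schema


def completeness_score(records, required=REQUIRED_FIELDS):
    """Share of required fields that are filled in across all records."""
    total = len(records) * len(required)
    if total == 0:
        return 1.0
    filled = sum(
        1 for record in records for field in required
        if record.get(field) not in (None, "")
    )
    return filled / total


def error_rate(records, is_valid):
    """Share of records that fail the supplied validity predicate."""
    if not records:
        return 0.0
    return sum(1 for record in records if not is_valid(record)) / len(records)


def days_since_update(record, today):
    """Age of a record in days, based on its 'updated' date."""
    return (today - record["updated"]).days
```

Tracking these values over time, rather than reading a single snapshot, is what lets a team see whether quality is improving.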

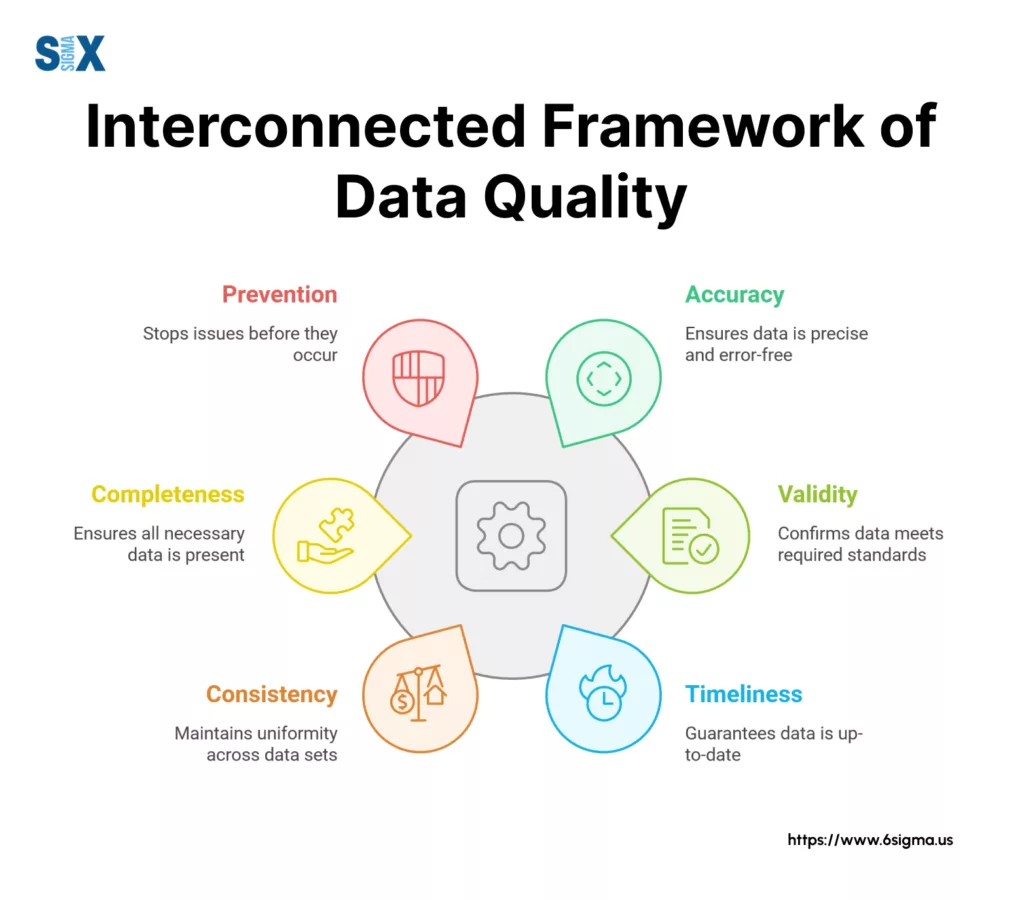

The Four Principles Of Quality Data Management

Successful data quality assurance follows four fundamental principles that guide its execution and maintenance.

- Prevention focuses on stopping data quality issues before they occur through proper validation and entry controls.

- Detection involves identifying existing problems through regular monitoring and analysis.

- Resolution establishes clear procedures for correcting identified issues and preventing their recurrence.

- Monitoring ensures ongoing oversight of data quality metrics and swift response to emerging problems.

These frameworks and principles create a structured approach to data quality assurance, helping organizations maintain reliable data for decision-making and operations.

As technology evolves, these foundational elements adapt to incorporate new tools and methodologies while maintaining their essential focus on data excellence.

The implementation of robust data quality metrics helps organizations track their progress and demonstrate the value of their quality assurance efforts.

These measurements provide tangible evidence of improvements and highlight areas requiring additional attention or resources.

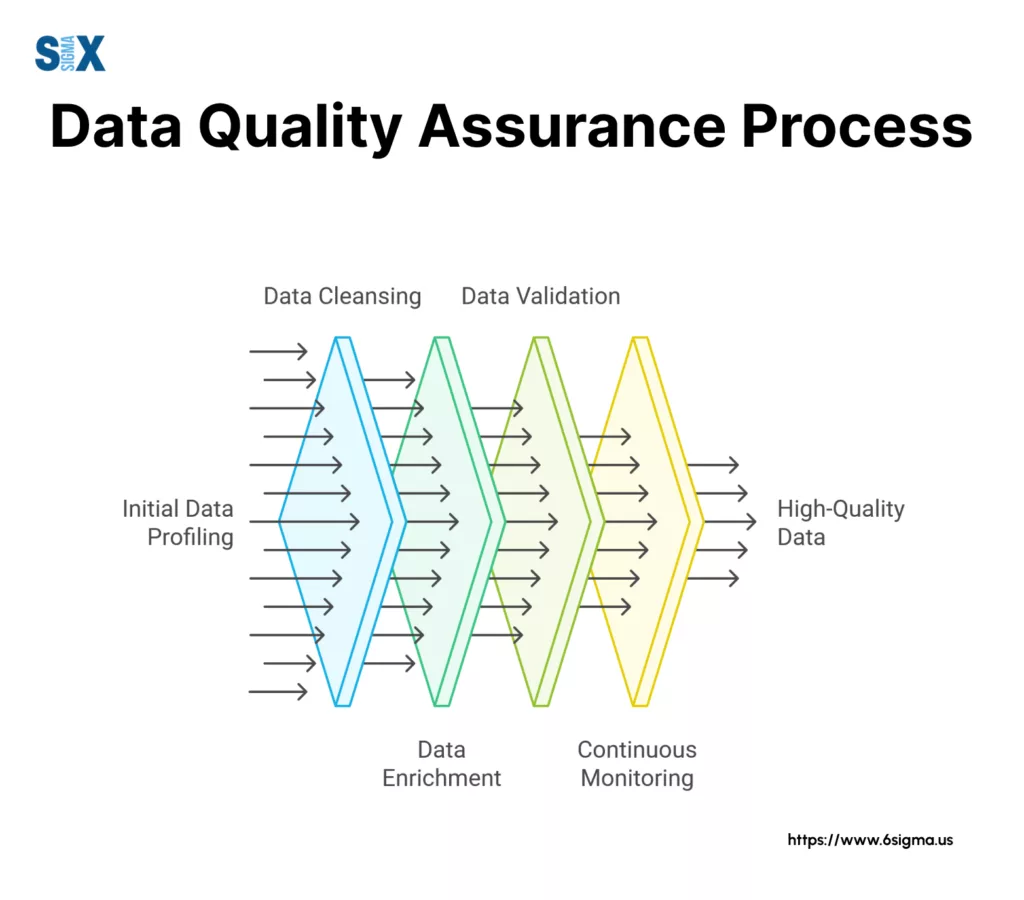

The Data Quality Assurance Process

A successful data quality assurance process requires systematic steps and proven methods to maintain data integrity.

Organizations that follow structured approaches achieve better results in their data management initiatives.

Essential Steps In The Quality Assurance Process

The data quality assurance process begins with data profiling, which examines existing datasets to understand their structure and content.

This initial assessment reveals patterns, relationships, and potential issues within the data.

Next comes data standardization, where organizations establish uniform formats and rules for data entry. For example, phone numbers follow a consistent pattern, and dates adhere to a single format across all systems.
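
The phone and date example can be illustrated with a minimal sketch. It assumes 10-digit phone numbers, a target format of NNN-NNN-NNNN, and a small set of accepted input date formats; all of these are assumptions made for the example.

```python
import re
from datetime import datetime

# Assumed input date formats, tried in order. An ambiguous date resolves
# to the first format that parses it.
DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y", "%d.%m.%Y")


def standardize_phone(raw: str) -> str:
    """Normalize a 10-digit phone number to NNN-NNN-NNNN."""
    digits = re.sub(r"\D", "", raw)  # drop everything except digits
    if len(digits) != 10:
        raise ValueError(f"expected 10 digits, got {len(digits)}: {raw!r}")
    return f"{digits[:3]}-{digits[3:6]}-{digits[6:]}"


def standardize_date(raw: str) -> str:
    """Convert a date in any assumed input format to ISO 8601 (YYYY-MM-DD)."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(raw.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date format: {raw!r}")
```

Raising an error on unrecognized input, instead of guessing, keeps questionable values visible for review.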

Data validation represents the third crucial step, verifying that information meets established quality criteria. This includes checking for accuracy, completeness, and consistency across different data points.

The cleansing phase removes or corrects identified errors, duplicates, and inconsistencies. Modern data quality assurance tools automate many cleaning tasks, reducing manual effort while improving accuracy.
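
One common cleansing task, removing duplicates, might look like the sketch below. It assumes that a trimmed, lower-cased email address identifies a customer, which is a simplification; real matching rules are usually more involved.

```python
def normalize_key(record):
    """Build a comparison key from the email (an assumed identity field)."""
    return record["email"].strip().lower()


def deduplicate(records):
    """Keep the first record seen for each normalized key, preserving order."""
    seen = set()
    unique = []
    for record in records:
        key = normalize_key(record)
        if key not in seen:
            seen.add(key)
            unique.append(record)
    return unique
```

Keeping the first occurrence is an arbitrary choice here; a production process might instead keep the most recently updated record or merge fields.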

Finally, continuous monitoring ensures ongoing data quality through regular checks and assessments. This step helps organizations identify and address new issues before they impact business operations.

Advanced Data Quality Assurance Techniques

Modern data quality assurance techniques leverage technology to enhance efficiency and effectiveness. Automated validation rules catch errors during data entry, preventing poor-quality data from entering systems.

Pattern matching algorithms identify inconsistencies and anomalies across large datasets. These tools flag potential issues for review, enabling quick resolution of data quality problems.

Proven Methods For Quality Assurance

Statistical analysis methods help evaluate data quality by identifying outliers and unusual patterns.

These approaches provide quantitative measures of data reliability and highlight areas needing attention.
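
As one example of such a method, the interquartile range (IQR) rule flags values that fall far outside the middle half of the data. The article does not name a specific technique, so the IQR rule and the conventional multiplier of 1.5 are assumptions made for this sketch.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = statistics.quantiles(values, n=4)  # quartile cut points
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < low or v > high]
```

A flagged value is a candidate for review, not proof of an error; a genuine but unusual transaction would be flagged in the same way.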

Cross-validation techniques compare data across different sources to ensure consistency.

When customer information varies between systems, these methods flag discrepancies for investigation and correction.
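
A comparison of this kind can be sketched as follows, assuming each source is a dictionary keyed by a shared customer ID. The function name and data layout are hypothetical.

```python
def find_discrepancies(source_a, source_b, fields):
    """Compare records that share an ID and report fields whose values differ.

    Returns a list of (record_id, field, value_in_a, value_in_b) tuples.
    Records present in only one source are ignored in this sketch.
    """
    issues = []
    for record_id in sorted(source_a.keys() & source_b.keys()):
        for field in fields:
            a_val = source_a[record_id].get(field)
            b_val = source_b[record_id].get(field)
            if a_val != b_val:
                issues.append((record_id, field, a_val, b_val))
    return issues
```

For example, if the sales system lists a customer in Austin and the support system lists the same customer in Dallas, the function returns one tuple naming that customer's `city` field.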

Regular auditing procedures verify compliance with quality standards and identify process improvements.

These assessments examine both the data itself and the procedures used to maintain its quality.

The integration of AI and machine learning continues to transform data quality assurance methods.

These technologies automate complex quality checks and predict potential issues before they occur. Cloud-based solutions enable real-time monitoring and correction across distributed systems.

Implementing A Data Quality Assurance Framework

A robust data quality assurance framework provides the structure needed to maintain data integrity across organizations.

This structured approach ensures consistent data quality while supporting business objectives and regulatory compliance.

Creating An Effective Quality Assurance Plan

The foundation of any data quality assurance plan starts with clear objectives aligned to business goals. Organizations must define specific, measurable targets for data quality improvement.

These targets might include reducing error rates, improving data completeness, or enhancing data accessibility.

Stakeholder involvement proves crucial during plan development.

IT managers need to coordinate with business leaders to understand operational requirements, while data analysts provide insights into technical feasibility.

This collaboration ensures the plan addresses both technical and business needs effectively.

Resource allocation forms another vital component of the planning process.

Organizations must determine the necessary tools, personnel, and training required to execute their data quality initiatives.

Essential Elements Of A Quality Assurance Checklist

A well-designed data quality assurance checklist serves as a practical tool for maintaining consistent standards.

The checklist should cover data validation rules, verification procedures, and quality metrics tracking.

Data entry validation represents the first checkpoint, ensuring information meets prescribed formats and standards before entering systems.

This includes field-level checks for completeness, format compliance, and logical consistency.
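
The three kinds of field-level check can be combined in a single validation function. The sketch below uses a hypothetical order record; the field names and the rule that shipping cannot precede ordering are assumptions for illustration.

```python
import re
from datetime import date

EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")  # loose structural check
REQUIRED = ("customer_email", "order_date", "ship_date")  # hypothetical fields


def validate_order(record):
    """Return a list of problems found in one record; empty means it passed."""
    errors = []

    # Completeness: every required field must be present and non-empty.
    for field in REQUIRED:
        if record.get(field) in (None, ""):
            errors.append(f"missing {field}")

    # Format compliance: the email must have a recognizable structure.
    email = record.get("customer_email")
    if email and not EMAIL_PATTERN.fullmatch(email):
        errors.append("customer_email has an invalid format")

    # Logical consistency: an order cannot ship before it was placed.
    order_date, ship_date = record.get("order_date"), record.get("ship_date")
    if order_date and ship_date and ship_date < order_date:
        errors.append("ship_date precedes order_date")

    return errors
```

Returning every problem at once, rather than stopping at the first, gives the person entering the data a complete list to correct.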

Regular data audits form the second checkpoint, verifying ongoing compliance with quality standards.

These audits should examine both automated and manual data handling processes to identify potential improvements.

Selecting The Right Quality Assurance Tools

Modern data quality assurance tools offer varied capabilities to support different aspects of quality management.

Data profiling tools analyze existing datasets to identify patterns and anomalies, while cleansing tools automate error correction and standardization.

Integration capabilities play a key role in tool selection. Quality assurance tools must work seamlessly with existing data management systems and business applications.

Cloud-based solutions offer flexibility and scalability, particularly for organizations with distributed operations.

Monitoring and reporting features enable organizations to track quality metrics and demonstrate improvement over time.

The implementation timeline should follow a phased approach, starting with critical data elements and gradually expanding to cover all relevant datasets.

This method allows organizations to refine their processes and address challenges without overwhelming resources.

Best Practices In Data Quality Assurance

Successful data quality assurance requires tailored approaches that address specific industry needs while following proven methodologies.

Industry-Specific Quality Assurance Applications

Healthcare organizations prioritize patient data accuracy to ensure proper treatment and regulatory compliance.

Medical records demand strict validation procedures, while insurance claims require precise coding and documentation.

These institutions implement specialized data quality assurance protocols that protect sensitive information while maintaining accessibility for authorized personnel.

Financial services firms focus on transaction data integrity and customer information accuracy.

Banks employ real-time validation systems to prevent fraudulent activities and maintain regulatory compliance. Investment firms utilize advanced data quality tools to ensure accurate portfolio management and risk assessment.

Manufacturing companies emphasize supply chain data quality to optimize operations.

Quality assurance procedures monitor inventory levels, track production metrics, and validate supplier information. These systems help prevent costly disruptions while maintaining efficient operations.

Overcoming Common Quality Challenges

Data silos present significant obstacles to maintaining consistent quality standards.

Organizations overcome this challenge by implementing centralized data quality frameworks that ensure uniform standards across departments.

Regular audits verify compliance and identify areas needing improvement.

Legacy systems often struggle with modern data quality requirements.

Successful organizations bridge this gap through middleware solutions that add validation capabilities without replacing existing infrastructure. This approach balances modernization needs with budget constraints.

Manual data entry remains a common source of errors. Progressive organizations address this challenge through automated validation rules and user training programs.

Regular feedback loops help identify recurring issues and improve data entry accuracy over time.

Scale and complexity create unique challenges for large organizations. Cloud-based quality assurance tools provide scalable solutions that grow with organizational needs.

These platforms offer flexible deployment options while maintaining consistent quality standards across global operations.

Data quality degradation over time requires ongoing attention. Regular monitoring and maintenance procedures help organizations maintain high standards throughout the data lifecycle.

Automated alerts notify relevant personnel when quality metrics fall below acceptable thresholds.
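
The threshold check behind such an alert can be sketched briefly. The metric names and minimum values below are hypothetical, and the notification step itself (email, chat message, ticket) is left out.

```python
# Hypothetical minimum acceptable values for each metric.
THRESHOLDS = {"completeness": 0.95, "validity": 0.98}


def find_breaches(metrics, thresholds=THRESHOLDS):
    """Return (name, value, minimum) for each metric below its minimum.

    A metric that is missing from the input is also reported, with value None,
    since an unmeasured metric cannot be assumed healthy.
    """
    breaches = []
    for name, minimum in thresholds.items():
        value = metrics.get(name)
        if value is None or value < minimum:
            breaches.append((name, value, minimum))
    return breaches
```

A scheduled job could call `find_breaches` after each metrics run and notify the data owner whenever the returned list is not empty.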

Integration challenges between different systems can compromise data quality. Organizations address this issue through standardized data formats and robust integration protocols.

Regular testing ensures data moves accurately between systems while maintaining integrity.

Resource constraints often limit quality assurance efforts. Successful organizations prioritize critical data elements and implement phased approaches to improvement.

This strategy allows for meaningful progress within available resources while demonstrating value for additional investments.

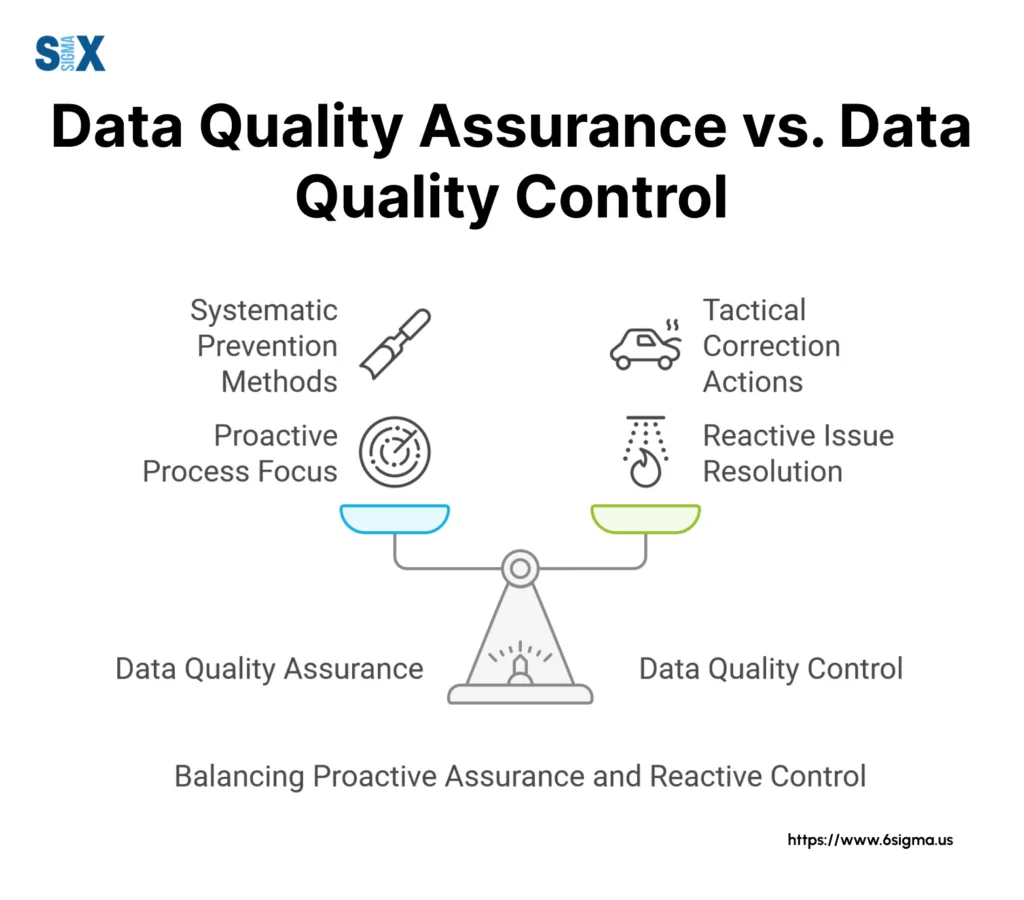

Data Quality Assurance vs. Data Quality Control

Although the terms are often used interchangeably, data quality assurance and data quality control serve distinct purposes in maintaining data integrity.

Understanding these differences helps organizations implement effective data management strategies that leverage both approaches appropriately.

Key Distinctions In Approach And Timing

Data quality assurance focuses on preventing errors through systematic processes and protocols.

This proactive approach establishes standards, procedures, and checkpoints before data enters the system. Organizations implement these measures during the planning and design phases of data management initiatives.

Data quality control, however, emphasizes detecting and correcting errors in existing datasets. This reactive approach identifies issues through testing and verification after data collection.

Quality control teams examine data samples to ensure they meet established standards and specifications.

Operational Differences And Implementation

Quality assurance procedures typically involve creating standardized processes, training programs, and validation rules.

These measures ensure consistent data entry and maintenance across all organizational levels. Teams focus on building systems that prevent errors from occurring in the first place.

Quality control activities include regular audits, statistical sampling, and error detection processes. These operations identify specific issues within datasets that require correction.

Control teams concentrate on finding and fixing problems in existing data rather than preventing future occurrences.

Integration Of Both Approaches

Successful organizations integrate both quality assurance and control measures into their data management strategy.

Quality assurance establishes the framework and standards, while quality control verifies compliance and identifies areas for improvement.

This combined approach provides maximum protection against data quality issues.

Modern tools support both functions through automated validation rules and error detection capabilities.

AI-powered systems can predict potential quality issues before they occur while identifying existing problems that require attention.

Cloud-based platforms enable real-time monitoring across both preventive and corrective measures.

The evolution of data management practices continues to blur the lines between these approaches.

Automated systems now provide immediate feedback during data entry while simultaneously checking existing records for inconsistencies.

This convergence creates more efficient and effective quality management processes.

Industry requirements often dictate the balance between assurance and control measures.

Healthcare organizations might emphasize preventive measures due to strict regulatory requirements, while retail companies might focus more on identifying and correcting existing issues that affect customer service.

Resource allocation decisions must consider both aspects of quality management.

Organizations need to invest in preventive measures while maintaining sufficient resources for ongoing quality control activities.

This balanced approach ensures effective data quality management throughout the data lifecycle.

Performance metrics should track the effectiveness of both quality assurance and control efforts.

Organizations can measure prevention success through reduced error rates, while control effectiveness appears in improved error detection and correction statistics.

These measurements help justify continued investment in both approaches.

Conclusion

Data quality assurance stands as a critical component of modern business operations, enabling organizations to maintain reliable and actionable data assets.

The increasing complexity of data environments and growing regulatory requirements make robust quality assurance practices essential for business success.

Organizations that implement effective data quality assurance programs gain significant advantages in decision-making accuracy, operational efficiency, and customer satisfaction.

These benefits extend across industries, from healthcare and finance to manufacturing and retail, demonstrating the universal importance of maintaining high-quality data.

As technology continues to evolve, data quality assurance practices must adapt to new challenges and opportunities.

The integration of AI, cloud computing, and automated tools will reshape how organizations approach data quality management, creating more efficient and effective processes for maintaining data integrity.

Frequently Asked Questions

A. Data QA represents the systematic process of verifying data accuracy, completeness, and reliability. This process includes establishing standards, implementing validation procedures, and monitoring data quality throughout its lifecycle. Quality assurance teams work to prevent errors while ensuring data meets business requirements and regulatory standards.

A. Conducting data quality assurance involves several key steps. Organizations must first establish clear quality standards and metrics. They then implement validation rules, automated checks, and monitoring systems to maintain these standards. Regular audits and reviews ensure ongoing compliance while identifying areas for improvement.

A. Data quality assessment requires examining multiple aspects of data integrity. Organizations evaluate accuracy by comparing data against trusted sources, measure completeness through field-level analysis, and verify consistency across different systems. Advanced tools automate many assessment tasks while providing detailed quality metrics.

A. Ensuring data quality assurance requires a multi-faceted approach. Organizations must implement robust validation procedures, provide adequate staff training, and utilize appropriate quality management tools. Regular monitoring and maintenance activities help maintain quality standards over time.

A. A Data QA professional specializes in maintaining data quality through various technical and procedural methods. These specialists develop and implement quality standards, monitor data integrity, and work with stakeholders to resolve quality issues. They play a crucial role in ensuring data reliability for business operations.

A. The five pillars of data quality consist of accuracy, completeness, consistency, timeliness, and validity. Accuracy ensures data correctly represents real-world values. Completeness verifies all required information exists. Consistency maintains uniform data representation across systems. Timeliness ensures data remains current. Validity confirms data meets defined business rules and formats.
